Despite measures by Microsoft to address the issue, consent phishing is still on the rise. When hunting for malicious OAuth apps, the most important things to look at are the permissions and reply URLs. Publisher verification status and install count are sometimes useful, while other factors can be ignored completely.

Consent phishing is still on the rise (not sure what that is? Read more here). Although prevention is best, how do you check that it hasn’t already happened to you?

First, a bit of background on how OAuth apps work in Microsoft 365.

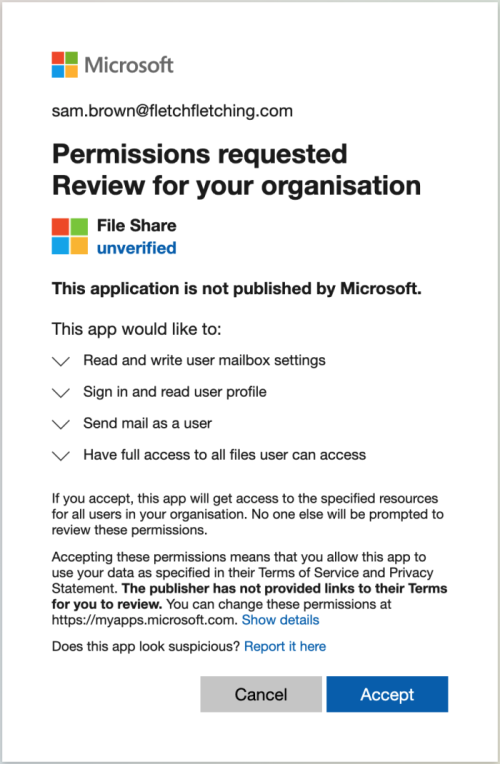

When you install an OAuth app in Microsoft 365, you see something like the familiar consent screen below, which shows the app's name and the permissions it's asking for. Once you've given your consent, behind the scenes a “service principal” is created in your tenant - this is your instance of the app. When the app does whatever the app is supposed to do (e.g. inspect your calendar, manage your to-do list etc.), it does it via this service principal.

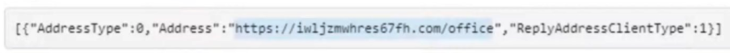

The app is able to authenticate to do this using a token that it is sent during the consent process. If you look closely at the URL you visit to get to the consent screen (example below), you’ll see there is a reply URL parameter (redirect_uri) - this tells Microsoft where to send the token when a user consents:

https://login.microsoftonline.com/common/oauth2/v2.0/authorize?client_id=<client_id>&response_type=code&redirect_uri=https%3A%2F%2Fpushsecurity.com&response_mode=query&scope=https%3A%2F%2Fgraph.microsoft.com%2Fcalendars.read%20https%3A%2F%2Fgraph.microsoft.com%2Fmail.send&state=12345

The app uses this token to authenticate as the service principal to then do whatever it’s supposed to do. In case your hacker brain is getting ahead of itself, you can’t change the reply URL to any old value to steal tokens. The app developer specifies a list of URLs that are allowed to be used here in the app’s manifest - more on that later.
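If you're handed a suspicious consent link, it can help to pull the interesting parameters out rather than squinting at the percent-encoding. Here's a minimal sketch using only Python's standard library. The function name `inspect_consent_url` and the all-zero client ID are made up for illustration; the URL otherwise mirrors the example above.

```python
from urllib.parse import urlsplit, parse_qs


def inspect_consent_url(url: str) -> dict:
    """Pull out the fields worth checking from an OAuth consent URL."""
    # parse_qs percent-decodes values and returns a list per parameter.
    query = parse_qs(urlsplit(url).query)
    redirect_uri = query.get("redirect_uri", [""])[0]
    return {
        "client_id": query.get("client_id", [""])[0],
        # Where the response is sent once the user consents.
        "redirect_host": urlsplit(redirect_uri).hostname,
        # Scopes are space-separated once decoded.
        "scopes": query.get("scope", [""])[0].split(),
    }


# Placeholder client ID (assumption); the rest follows the example URL above.
example = (
    "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"
    "?client_id=00000000-0000-0000-0000-000000000000"
    "&response_type=code"
    "&redirect_uri=https%3A%2F%2Fpushsecurity.com"
    "&response_mode=query"
    "&scope=https%3A%2F%2Fgraph.microsoft.com%2Fcalendars.read"
    "%20https%3A%2F%2Fgraph.microsoft.com%2Fmail.send"
    "&state=12345"
)

info = inspect_consent_url(example)
print(info["redirect_host"])
print(info["scopes"])
```

The two things to read off are the host the response goes to and the list of scopes being requested.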

Until recently, this ecosystem was a bit of a wild west. Although you can publish apps in the official app store, you don’t have to. Attackers were able to create an app in their own tenant and then send consent URLs encouraging victims to grant it access, often with great success.

In October 2020, Microsoft released “Publisher verification”, allowing developers to be vetted by Microsoft and get a badge of approval on their consent screens. The following month, Microsoft changed its policies so that users, by default, weren't allowed to consent to apps that didn't come from a verified publisher. This makes a consent phishing attack much more difficult for attackers, who are now left with the following options:

Find a tenant that allows users to consent to non-verified apps: the default should have been changed for every tenant to disallow this, but an admin can change it back (in case you’re curious, see how to check your own settings here).

Go through the publisher verification process anyway: the process is detailed here. It’s probably possible to trick, but it requires convincingly faking a real company, which is going to be expensive and hard to scale.

Compromise an already verified publisher: this definitely adds cost and complexity to an attack, but it would be an extremely valuable and effective approach - how much do you trust the security of all your app publishers?

So let’s look for some malicious apps...

The Azure AD interface for inspecting OAuth apps (service principals) is the Enterprise Applications blade, but it lacks key information you need for this exercise, such as the reply URLs and publisher status. You might be able to see similar information if you have licenses for Cloud App Security, but they’re expensive. You can also get full information about service principals from the Graph API or PowerShell. (Is it too early to say that Push can also solve this problem for you in only a few button clicks?)
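If you go the Graph API route, the service principals come back as JSON from `GET https://graph.microsoft.com/v1.0/servicePrincipals`. The sketch below assumes you've already fetched that JSON (authentication and paging are left out) and just boils one record down to the fields this hunt cares about. The property names `displayName`, `appId`, `replyUrls` and `verifiedPublisher` follow our reading of the servicePrincipal resource, so check them against the current documentation; the helper name and the sample record are made up.

```python
def summarise_service_principal(sp: dict) -> dict:
    """Reduce one servicePrincipal record to the fields this hunt cares about."""
    # verifiedPublisher may be missing, null, or an object with null fields.
    publisher = sp.get("verifiedPublisher") or {}
    return {
        "name": sp.get("displayName"),
        "app_id": sp.get("appId"),
        "reply_urls": list(sp.get("replyUrls") or []),
        "verified": bool(publisher.get("verifiedPublisherId")),
    }


# Made-up record, shaped like one item in the response's "value" array.
sample = {
    "displayName": "Calendar Helper",
    "appId": "11111111-1111-1111-1111-111111111111",
    "homepage": "https://calendar-helper.example.com",
    "replyUrls": ["https://calendar-helper.example.com/auth"],
    "verifiedPublisher": {
        "displayName": None,
        "verifiedPublisherId": None,
        "addedDateTime": None,
    },
}

summary = summarise_service_principal(sample)
print(summary)
```

Running this over every record gives you a flat table of names, reply URLs and publisher status that the blade doesn't show in one place.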

Right off the bat, we can disregard a lot of the information presented by the app. The app’s name, home page and logo can all be set to whatever an attacker likes, so if they’re trying to trick a user these will most likely look convincing and legitimate. The best you can do here is sanity check that the app makes sense in the context of your organisation or this user.

So what is useful?

What can the app do?

Start by prioritising apps by the permissions they’ve been granted. Attackers will often target access to mail, files, or admin functionality, so any app that requests these should be subject to more scrutiny and looked at first. As with any security exercise, you know best what’s sensitive to your organisation, so apply that logic here. If you are unsure what a specific permission means, here's a full reference.
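One rough way to do this triage is to count how many sensitive-looking permissions each app holds and sort on that. The sketch below is only an illustration: the prefix list in `SENSITIVE_PREFIXES` is our assumption about what "mail, files, or admin functionality" maps to, the app names and scope strings are invented, and you should swap in whatever matters to your organisation.

```python
from __future__ import annotations

# Assumed starting list: mail, files/SharePoint, and directory/admin permissions.
SENSITIVE_PREFIXES = ("mail.", "files.", "sites.", "directory.", "rolemanagement.")


def sensitive_scopes(scope_string: str) -> list[str]:
    """Return the scopes in a space-separated string that look sensitive."""
    return [
        scope
        for scope in scope_string.split()
        if scope.lower().startswith(SENSITIVE_PREFIXES)
    ]


def prioritise(apps: dict[str, str]) -> list[str]:
    """Return app names ordered by number of sensitive scopes, highest first."""
    return sorted(
        apps,
        key=lambda name: len(sensitive_scopes(apps[name])),
        reverse=True,
    )


# Invented apps mapped to the space-separated scopes they were granted.
apps = {
    "Todo Sync": "User.Read Tasks.ReadWrite",
    "Inbox Tool": "Mail.Read Mail.Send Files.Read.All",
    "Doc Viewer": "User.Read Files.Read",
}

for name in prioritise(apps):
    print(name, sensitive_scopes(apps[name]))
```

A count is a blunt instrument, so treat the ordering as a reading list rather than a verdict.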

Access to all data or just specific users?

It’s important to understand the difference between app permissions and delegated permissions. In short, app permissions grant tenant-wide access, while delegated permissions grant access as the user. For example, if the app permission Mail.Read was granted to an app, it could read everyone’s email. If the delegated permission Mail.Read was granted to an app, it could only read the mail of the person who granted permission. Learn more about app vs. delegated permissions here.

How many users have installed this app?

If you are the victim of consent phishing, hopefully the attacker only managed to dupe a small number of users, so the common advice is to prioritise apps with a low install count. Although this makes sense, it’s often not that practical: unless you’ve been running a tight ship, you’ll probably find a lot of apps used by only one or two people.
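If you want the install counts anyway, you can derive them from the delegated permission grants (the `oauth2PermissionGrants` collection in Graph). The sketch below assumes you've already exported those grants as a list of dictionaries; as we understand the resource, per-user grants have `consentType` set to `Principal` and carry the user's ID in `principalId`. The IDs here are made up.

```python
from __future__ import annotations

from collections import defaultdict


def users_per_app(grants: list[dict]) -> dict[str, int]:
    """Count distinct consenting users per client service principal."""
    users = defaultdict(set)
    for grant in grants:
        # Only per-user grants name a user; admin grants for everyone are skipped.
        if grant.get("consentType") == "Principal" and grant.get("principalId"):
            users[grant["clientId"]].add(grant["principalId"])
    return {client: len(ids) for client, ids in users.items()}


# Invented grants; "sp-a" has two grants from the same user, which count once.
grants = [
    {"clientId": "sp-a", "consentType": "Principal", "principalId": "user-1", "scope": "Mail.Read"},
    {"clientId": "sp-a", "consentType": "Principal", "principalId": "user-2", "scope": "Mail.Read"},
    {"clientId": "sp-a", "consentType": "Principal", "principalId": "user-1", "scope": "Files.Read"},
    {"clientId": "sp-b", "consentType": "Principal", "principalId": "user-3", "scope": "User.Read"},
    {"clientId": "sp-c", "consentType": "AllPrincipals", "principalId": None, "scope": "User.Read"},
]

counts = users_per_app(grants)
print(counts)
```

Apps that only appear through admin grants won't show up in this count at all, which is worth keeping in mind when reading the numbers.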

On the flip side, app permissions can only be approved by an admin; admins can also consent to delegated permissions on behalf of all users. So apps with these permissions - effectively tenant-wide access - have also probably been approved by only a single user. Hopefully you have more faith in your admins’ ability to spot a phish but you should still treat these as having only been vetted by a single user.
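To pull out the apps with effectively tenant-wide access, you can combine two sources: delegated grants that an admin made on behalf of all users, and app permission assignments. This is a sketch under a few assumptions: the inputs are already-exported lists shaped like Graph's `oauth2PermissionGrants` and `appRoleAssignments`, admin-for-everyone grants have `consentType` equal to `AllPrincipals`, and in an app role assignment `principalId` is the service principal that received the permission. The IDs are invented.

```python
from __future__ import annotations


def tenant_wide_apps(
    delegated_grants: list[dict],
    app_role_assignments: list[dict],
) -> set[str]:
    """Return service principal IDs with effectively tenant-wide access."""
    # Delegated permissions an admin consented to on behalf of all users.
    wide = {
        grant["clientId"]
        for grant in delegated_grants
        if grant.get("consentType") == "AllPrincipals"
    }
    # App permissions always apply across the tenant.
    wide |= {assignment["principalId"] for assignment in app_role_assignments}
    return wide


# Invented sample data.
delegated_grants = [
    {"clientId": "sp-a", "consentType": "Principal", "principalId": "user-1"},
    {"clientId": "sp-c", "consentType": "AllPrincipals", "principalId": None},
]
app_role_assignments = [
    {"principalId": "sp-d", "resourceId": "graph-sp", "appRoleId": "role-1"},
]

wide = tenant_wide_apps(delegated_grants, app_role_assignments)
print(sorted(wide))
```

Everything this returns was approved by an admin, so it's a good shortlist to review by hand.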

Where the tokens go - the thing you can’t spoof

The only piece of information an app can’t lie about is its reply URLs. As mentioned above, these are the URLs that Microsoft is allowed to send an access token to when a user consents. If the app publisher doesn’t own these domains, they won’t ever receive their token and they can’t use the app’s access. If you can confirm all the reply URLs specified by the app are legitimately owned by the organisation the app is supposed to be from, you can be fairly confident the app is owned by them.
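A first pass over reply URLs can be automated: compare each URL's host against the domains you'd expect the publisher to own and flag anything else for a closer look. The sketch below is only that first pass. The function name, the expected-domain list and the sample URLs are all illustrative, and a clean result doesn't prove ownership of the domain.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def unexpected_reply_urls(reply_urls: list[str], expected_domains: list[str]) -> list[str]:
    """Return reply URLs whose host is not an expected domain or a subdomain of one."""
    flagged = []
    for url in reply_urls:
        host = (urlsplit(url).hostname or "").lower()
        # Match the domain itself or a true subdomain, not a lookalike that
        # merely contains the name somewhere in the host.
        matches = any(
            host == domain or host.endswith("." + domain)
            for domain in expected_domains
        )
        if not matches:
            flagged.append(url)
    return flagged


# Illustrative reply URLs for an app that claims to be Salesforce related.
reply_urls = [
    "https://login.salesforce.com/callback",
    "https://salesforce.com.example.net/callback",
    "https://salesforce-login.example.org/cb",
]

flagged = unexpected_reply_urls(reply_urls, ["salesforce.com"])
print(flagged)
```

Matching on the end of the host name is what stops `salesforce.com.example.net` from passing just because it starts with the right words.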

In the interests of keeping this short(er), a guide on domain analysis is probably out of scope. However, here’s a real-world example of a malicious OAuth app that was pretending to be Salesforce related, using a pretty suspicious-looking URL, so you won’t always need deep analysis:

Is it verified? Does it matter?

You might be tempted to trust any app that is verified by Microsoft. The stamp of verification is clearly worth something but, as mentioned earlier, don’t discount the possibility of a determined attacker compromising a verified publisher to publish their own malicious app or edit an existing one.

Likewise, you might also find a lot of your service principals, even ones by seemingly reputable publishers, are reported as not verified. This is because the service principal is an instance of the app at the time of install - if the publisher wasn’t verified at that point, the service principal won’t be (even if the publisher has since been verified). Since Microsoft only introduced publisher verification in 2020, all apps installed before this date will report as unverified. For reference, 78% of the service principals we’ve looked at report as having unverified publishers so this isn’t necessarily something to worry about.

If you find apps that look like they don't belong and you're worried they're the result of consent phishing, remove the app's access (you can do this on the app's Properties page in the Enterprise Applications blade) and then investigate how the app got there in the first place. A detailed walkthrough of how to fully investigate is coming soon.

You can gather information about the apps in your Microsoft 365 tenant with only a few clicks using the Push platform. See which apps are installed on your tenant, what kind of access they have and if we think any look suspicious. It only takes a few minutes and is totally free! Check it out.