Common ways an app or integration could lead to compromise in Microsoft Azure: consent phishing, unverified apps, hijackable URLs & implicit grant flow.

With the proliferation of SaaS apps and integrations comes an equal helping of uncertainty surrounding the associated security risks. If you’ve ever found yourself in a position where you’ve had to review a SaaS app integration, whether it’s during the remediation stage of an incident or simply during the process of tending to a user request, then keep on reading.

This article covers common ways an app could lead to compromise in Microsoft Azure, and what to look out for when determining risk to your organization.

Consent phishing

The issue:

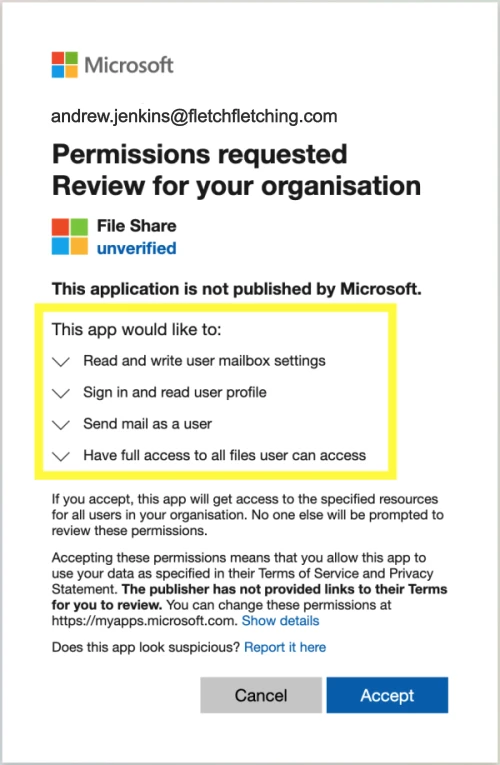

This method of compromising user accounts has been covered a few times by Push. Without rehashing too much of the content, the main idea behind consent phishing is to get a user to perform an integration while the app masquerades as something official.

As an example, a user is sent an email where the content is either surprisingly legitimate, or sparks sufficient curiosity to make them want to access the data behind the link. They are directed to a Microsoft or Google login page, where the app asks for certain permissions, such as mailbox access. The user, having performed these actions before, thinks nothing of it and clicks ‘allow’. The attacker has successfully tricked the user into giving them access to their mailbox (or whichever privileges the app was requesting).

The solution:

There are two ways to help prevent this type of compromise:

The first is to go the “block everything” route by preventing any integrations from being added to your tenants at all. This is quite heavy-handed and a bit like throwing the baby out with the bathwater, as this approach leads to IT/security departments becoming known as the departments of ‘NO’, potentially resulting in users circumventing controls, and the emergence of shadow IT.

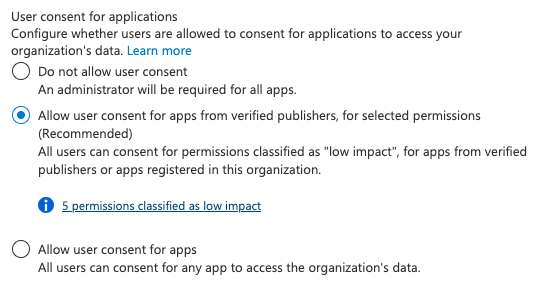

The second is to be sensible about what to allow and what to prevent during SaaS integrations. For instance, in Microsoft 365 administrators are able to specify low-risk scopes, such as ones specifically used for performing social logins (which are okay to do by the way). Admins can then allow employees to perform social logins, and integrate apps making use of other low-risk scopes from verified apps only. Employees can also request access to anything requiring other scopes. This is a great way to enable users to perform their jobs, while preventing them from accidentally exposing themselves or the wider organization to unnecessary risk.

When configuring the above for the first time, Microsoft provides a list of 5 scopes:

| Scope | Description |
| --- | --- |
| profile | View user's basic profile |
| openid | Sign users in |
| email | View user's email address |
| User.Read | Sign in and read user profile |
| offline_access | Maintain access to data you have given it access to (refresh tokens) |

The above scopes are the minimum required to enable social logins to take place, and would cover a good amount of apps that only require basic information for account creation purposes.

If you’d like to go a step further, you should also consider approving the following to allow users to integrate these relatively common scopes from verified apps:

| Scope | Description |
| --- | --- |
| Calendars.Read | Read user calendars |
| Calendars.ReadWrite | Have full access to user calendars |
| Calendars.ReadWrite.Shared | Read and write user and shared calendars |
| Contacts.Read | Read user contacts |
| Contacts.Read.Shared | Read user and shared contacts |
| Contacts.ReadWrite | Have full access to user contacts |
| Contacts.ReadWrite.Shared | Read and write user and shared contacts |
| People.Read | Read users' relevant people lists |
| Files.Read.Selected | Read files that the user selects |
| Files.ReadWrite.Selected | Read and write files that the user selects |
| User.ReadWrite | Read and write access to user profile |

We’ve determined these scopes to be relatively low-risk, but this would depend on the risk appetite of your organization. Pre-approving the scopes will go a long way towards enabling your users to make use of SaaS apps without raising unnecessary approval requests from your IT or security team.
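As a rough illustration of how such a pre-approval list could be applied when triaging a request, the sketch below compares the scopes an app asks for against the scopes listed above. The function name and the case-insensitive comparison are assumptions made for this example, not part of any Microsoft tooling.

```python
# Scopes treated as pre-approved, copied from the two lists above.
# Adjust this set to match your own organization's risk appetite.
PRE_APPROVED_SCOPES = {
    "openid", "profile", "email", "User.Read", "offline_access",
    "Calendars.Read", "Calendars.ReadWrite", "Calendars.ReadWrite.Shared",
    "Contacts.Read", "Contacts.Read.Shared", "Contacts.ReadWrite",
    "Contacts.ReadWrite.Shared", "People.Read", "Files.Read.Selected",
    "Files.ReadWrite.Selected", "User.ReadWrite",
}


def scopes_needing_review(requested_scopes):
    """Return the requested scopes that are not pre-approved, in request order.

    Scope names are compared case-insensitively (an assumption of this sketch).
    """
    approved = {scope.lower() for scope in PRE_APPROVED_SCOPES}
    return [scope for scope in requested_scopes if scope.lower() not in approved]


# Hypothetical request: a social login plus mailbox access.
print(scopes_needing_review(["openid", "profile", "User.Read", "Mail.Read"]))
```

Anything the function returns would go through the normal approval request; an empty list means the app only asks for scopes you have already accepted.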

Unverified apps

The issue:

First, let’s define what causes an app to be classified as unverified. When you see an app in your tenant that’s marked as unverified, it means that the tenant that publishes the app has not gone through the Publisher Verification process. Going through the verification process requires the publisher to have a Microsoft Partner Network (MPN) account, which typically involves verifying their business address, email address, and a few additional due diligence tasks.

While I’m sure this is not a 100% infallible process, at the very least it provides you with the confidence that someone at Microsoft has reached out to the company and spoken to someone who confirmed they are who they say they are. This is opposed to a random person creating a Microsoft Azure tenant and marking their app as being published by Adobe, as an example.

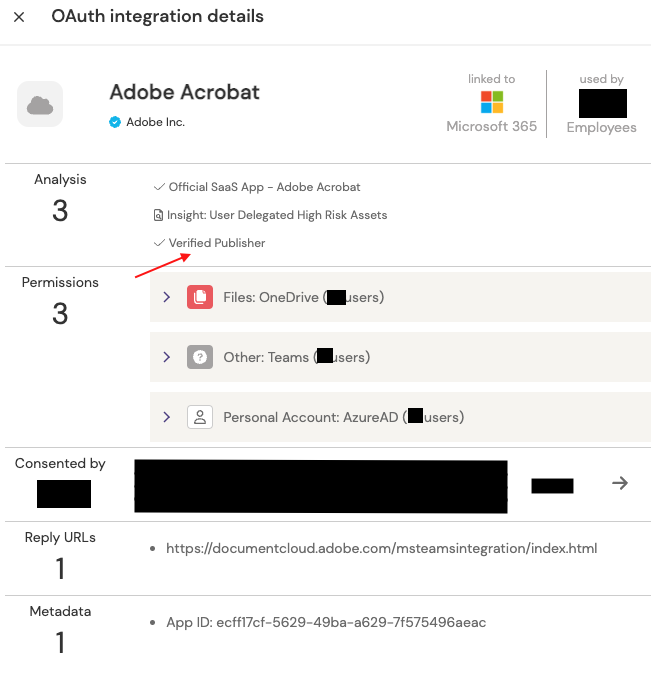

At Push, we’ve noticed plenty of unverified apps published by legitimate vendors. This could be related to vendors having multiple tenants, and not having completed the verification process across all yet. As an example, we have a few of Adobe’s apps for Microsoft 365:

In the above image, we have a verified app from Adobe, Inc. We know this due to the ‘Verified Publisher’ attribute that is included when parsing the information provided by Microsoft. We can also see that the only reply url is one associated directly with Adobe – adobe.com. Next, we have an unverified app:

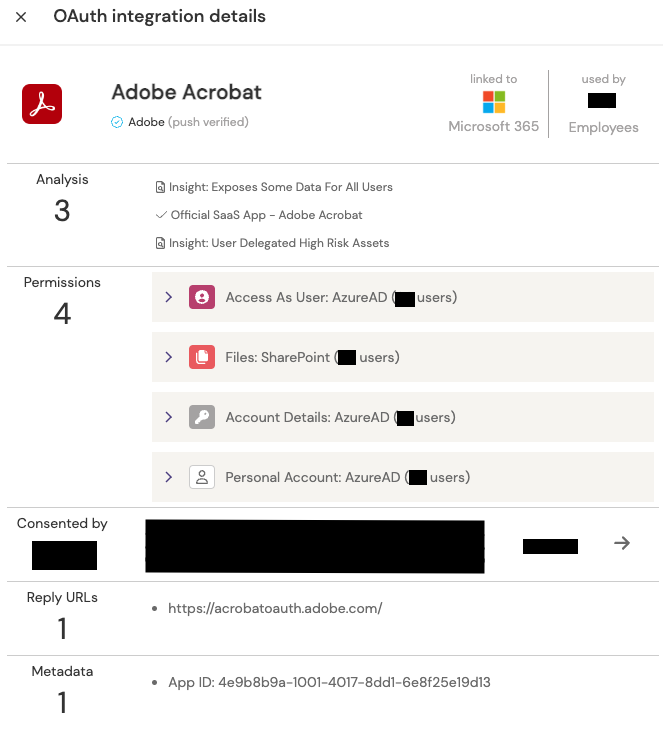

This app does not include the ‘verified publisher’ attribute when reading the information provided by Microsoft. However, the app only has one reply url, and this is again a subdomain of adobe.com.

The takeaway here is that not all unverified apps are malicious. More often than not it’s related to the vendor not having gone through the verification process, but this means it unfortunately becomes the security team’s burden to figure out.

The solution:

At Push, we attempt to review every application we come across to determine if it's legit and whether it belongs to the vendor it claims to originate from. There are multiple ways to do this, but as a general rule of thumb if all the app’s reply urls are associated with the vendor, you are good. You can perform an integration from the app’s website to verify that the particular app ID (seen in the metadata tag above) is the one you are looking at in your environment.
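The rule of thumb about reply urls can be sketched in a few lines. This is a minimal example, assuming you have already exported the app's reply urls as a list of strings; the function name and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def reply_urls_outside_vendor(reply_urls, vendor_domain):
    """Return reply URLs whose host is neither the vendor domain nor one of its subdomains."""
    vendor = vendor_domain.lower().rstrip(".")
    outside = []
    for url in reply_urls:
        host = (urlparse(url).hostname or "").lower()
        # Match the exact domain or a true subdomain ("x.adobe.com"),
        # not a lookalike such as "adobe.com.evil.example".
        if host != vendor and not host.endswith("." + vendor):
            outside.append(url)
    return outside


# Hypothetical reply URLs for an app claiming to be published by Adobe.
urls = ["https://auth.adobe.com/callback", "https://adobe.com.evil.example/callback"]
print(reply_urls_outside_vendor(urls, "adobe.com"))
```

An empty result supports the claim that the app belongs to the vendor; any URL that is returned deserves a manual look before you draw a conclusion.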

Apps with excessive privileges

The issue:

When you first start doing deep dives on permissions associated with apps in your environment, you find yourself looking at some apps and wondering out loud “we’re granting this vendor access to what?!”

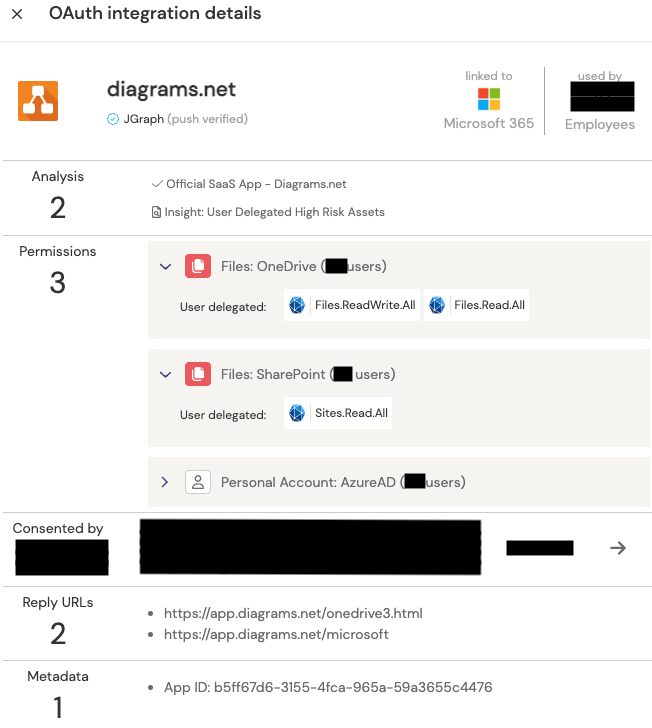

It’s a totally normal response, but don't worry, we’re here to help. Let’s take diagrams.net as an example:

At first glance this doesn’t seem too bad. For the purposes of this example, let’s say the app was approved by 49 users. That means if diagrams.net got compromised, an attacker would potentially have access to the OneDrive files of 49 of your users. “That’s OK!” you say. “This will only affect a handful of files they’ve been working on locally. Our policy specifies that any company data, specifically data containing PII, be stored in SharePoint.”

And then comes the part where you notice the following permission: Sites.Read.All. This permission gives the application the ability to read every file across all SharePoint sites in your organization (that the users have permission to access). Suddenly the scope of data access is much larger than you hoped.
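One quick way to surface permissions like this during a review is to flag scope names ending in `.All`. This is only a naming heuristic and an assumption of this sketch, not a complete risk model: it will miss broad scopes that are named differently, and the sample scope list is hypothetical.

```python
def broad_scopes(scopes):
    """Return scopes whose name ends in '.All' (a naming heuristic, not a risk rating)."""
    return [scope for scope in scopes if scope.lower().endswith(".all")]


# Hypothetical set of permissions granted to an app.
granted = ["Files.Read", "Sites.Read.All", "User.Read"]
print(broad_scopes(granted))
```

Scopes flagged this way are a starting point for the risk assessment described below, not a verdict on the app.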

The solution:

When faced with the dilemma of granting apps access to resources within your organization, the best course of action is to do a risk assessment.

This requires some good ol’ googling and reviewing the security policies of the app’s creator. You ideally also want to know who they use to process your data. Through this process, I found a blog post on diagrams.net detailing their approach to security and user privacy. They do make note that they don’t store any sensitive customer data on their servers, and thus let you comply with GDPR, ISO 2700* etc. certifications if you use their services.

While this is great from a tick box exercise perspective, this doesn’t address the original concern – how much risk are you taking on by letting their app integrate with your environment? What could an attacker who compromises diagrams.net have access to and how do you lessen the risk while still allowing employees to use the app?

Further in the same blog post, they link to a GitHub repository that contains their security and privacy processes, policies, and even some pentest reports. They do a great job of including this information, by the way, so cheers to diagrams.net!

At this point you should have a better understanding of the security of the vendor you’re integrating into your organization, and whether it’s okay to accept the risk. Documenting and adding the information you found to your risk register is also a good idea. Likely, you’ll be taking this information to your Information Security Manager for risk acceptance.

We’re working on ways to provide this information to our clients through the Push app dashboard in future, too. Sign up or subscribe to our blog to get product updates when features like this are introduced.

Hijackable urls and implicit grant flow

The issue:

Developer side note: The implicit grant flow is no longer recommended, both because of security concerns and because it won’t function in browsers that block 3rd party cookies. Instead, you should switch to using the authorization code flow if applicable to your requirements.

Let’s quickly go over how OAuth2’s implicit grant flow works so you can better understand how to spot potentially risky apps and integrations, and why this can result in a security concern. Microsoft provides a great breakdown of the implicit grant flow, however for the purposes of brevity (and simplicity), it does the following:

1. A user goes to a web app and clicks a login link.

2. The web app redirects the user to authenticate and authorize the app. This is performed against your identity provider (in this example, Microsoft).

3. If this is the first time authorizing the app, the user is presented with a list of scopes (permissions) the app will need access to, and the user clicks “approve”.

4. The identity provider responds with a token sent to one of the hard-coded reply urls associated with the app integration (e.g. https://apps.diagrams.net/microsoft as with the ‘Apps with excessive privileges’ example).

5. The app uses the token to access the user’s resources with the permissions approved in step 3.

Based on the flow above, if an attacker gets their hands on the token from step 4, they can perform requests as the user, granting them access to your resources. To get the token, the attacker needs to control one of the hardcoded reply url endpoints, and convince a user to authenticate to the app, perhaps via a phishing attack.
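To make step 4 concrete, the sketch below shows how little work is needed to read a token once the redirect lands on an endpoint you control. In the implicit grant flow the token is returned in the URL fragment of the redirect, where script running on the page at the reply url can read it. The redirect URL and token value here are made-up placeholders.

```python
from urllib.parse import parse_qs, urlparse


def token_from_redirect(redirect_url):
    """Extract the access_token value from the fragment of an implicit-flow redirect URL."""
    fragment = urlparse(redirect_url).fragment
    values = parse_qs(fragment).get("access_token")
    return values[0] if values else None


# Placeholder redirect, shaped like an implicit grant response.
example = (
    "https://apps.diagrams.net/microsoft"
    "#access_token=EXAMPLE_TOKEN&token_type=Bearer&expires_in=3599"
)
print(token_from_redirect(example))  # EXAMPLE_TOKEN
```

Whoever serves the page behind the reply url is in the same position as this function, which is why ownership of every reply url matters.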

As an example, some of the apps we’ve reviewed contained reply urls which were subdomains of azurewebsites.net and ngrok.io. These urls don’t appear problematic at first. However, the urls could have been used during the development process, and were forgotten about at the conclusion of the project. During the review process we follow at Push, we found multiple examples of such urls that were no longer in use.
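A first pass over an app's reply urls can be automated by flagging hosts under shared or temporary hosting domains. The sketch below uses the two domains mentioned above; the function name and sample URLs are hypothetical, and a flagged URL is a prompt for manual review rather than proof that it can be hijacked.

```python
from urllib.parse import urlparse

# Domains where anyone can register a subdomain; extend as needed.
SHARED_HOSTING_SUFFIXES = ("azurewebsites.net", "ngrok.io")


def reply_urls_on_shared_hosting(reply_urls, suffixes=SHARED_HOSTING_SUFFIXES):
    """Return reply URLs whose host is a subdomain of a shared hosting domain."""
    flagged = []
    for url in reply_urls:
        host = (urlparse(url).hostname or "").lower()
        if any(host.endswith("." + suffix) for suffix in suffixes):
            flagged.append(url)
    return flagged


# Hypothetical reply URLs pulled from an app registration.
sample = [
    "https://myapp-dev.azurewebsites.net/callback",
    "https://apps.diagrams.net/microsoft",
    "https://abc123.ngrok.io/callback",
]
print(reply_urls_on_shared_hosting(sample))
```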

This could allow an attacker to register the urls and perform phishing attacks against organizations that use these particular apps, granting the attacker access to previously approved scopes and resources. The outcome of this attack would be similar to redirect URL manipulation, but instead of taking advantage of an open or misconfigured redirect, the attacker is in control of the endpoint where the token ends up.

How would you even go about detecting if an app makes use of the implicit grant flow? This requires getting your hands dirty with making authorization requests to your tenant for the specific app ID, and passing the “response_type=token” parameter in the url. This should return an error if the app is not configured with the implicit grant flow. If you’d like to test this yourself, you can follow the “Run in Postman” link at the top of this article to make this process a bit easier.
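If you prefer to build the test request by hand, the sketch below assembles an authorization URL for the Microsoft identity platform v2.0 authorize endpoint with `response_type=token`. The tenant, app ID, and default scope are placeholders chosen for this example; substitute the values for the app you are reviewing and open the resulting URL in a browser while signed in to a test account.

```python
from urllib.parse import urlencode


def implicit_flow_probe_url(tenant, client_id, redirect_uri, scope="User.Read"):
    """Build an authorize URL that asks for a token via the implicit grant flow."""
    params = {
        "client_id": client_id,
        "response_type": "token",   # the parameter that requests the implicit flow
        "redirect_uri": redirect_uri,
        "scope": scope,
        "response_mode": "fragment",
    }
    base = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize"
    return base + "?" + urlencode(params)


# Placeholder tenant and app ID, for illustration only.
url = implicit_flow_probe_url(
    tenant="contoso.onmicrosoft.com",
    client_id="00000000-0000-0000-0000-000000000000",
    redirect_uri="https://apps.diagrams.net/microsoft",
)
print(url)
```

The redirect_uri must match one of the reply urls registered for the app, otherwise the request fails for a reason unrelated to the implicit grant setting.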

Another example of a hijackable url includes dangling DNS records. Let’s say your app includes a reply url pointing to a legacy server used for development (eg. apptesting-dev.ctrlaltsecure.com). This server was hosted on an EC2 instance in AWS, and has long since been decommissioned. However, the IP address associated with the instance is still pointing to the same address. A determined attacker could potentially gain access to the IP address by spinning up resources until it’s assigned to them.

OWASP has published an article and HackerOne posted a guide highlighting ways to take over subdomains, and it’s a risk that is very easy to overlook.

The solution:

Unfortunately there is no elegant solution to this problem, and it’s not easy to spot as you would need to review each url to see if it’s still in use, in addition to figuring out if the app makes use of the implicit grant flow. Even then, is the active url being used by the developer, or has an attacker already claimed it?

The best course of action here is likely to make use of a proxy that prevents users from accessing unclassified urls, or urls with a low reputation. However, you will risk breaking applications and making your developers angry. This also does not solve the dangling DNS issue, as with the EC2 instance problem above.

Another option is to contact vendors of apps that you’ve noticed including such urls in their apps and ask them to remove the stale entries from their apps.

You think you’ve been compromised. Now what?

Regardless of the method of compromise, there are a few steps you can take to review what happened and to prevent further access into your environment.

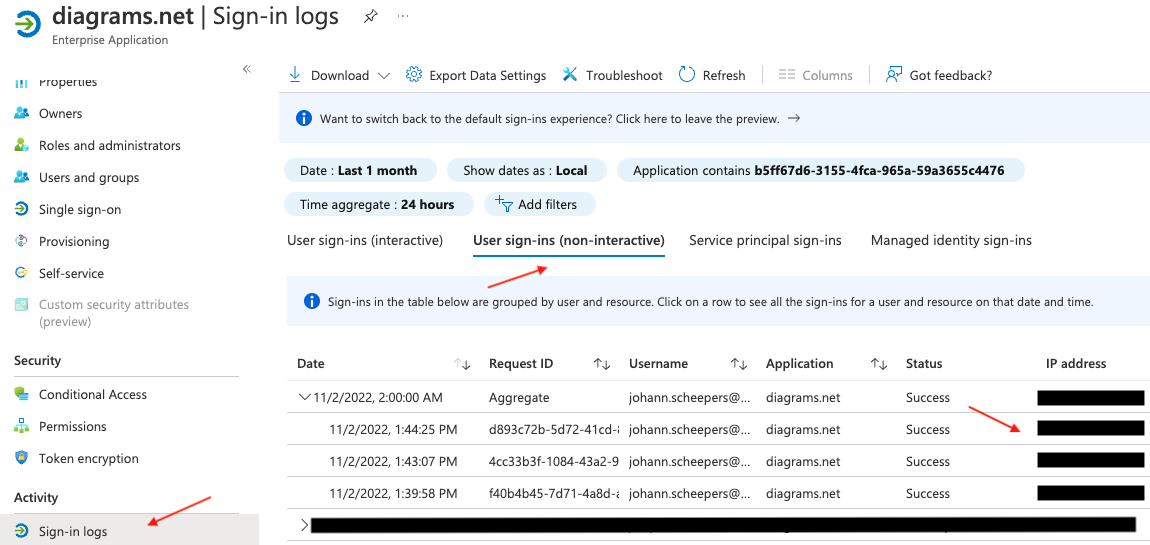

Review app sign-in logs

In Azure Active Directory, head to Enterprise applications and click on the app you want to review. In the new window, click on sign-in logs. You will be presented with a list of user sign-ins (interactive and non-interactive), service principal sign-ins, and managed identity sign-ins.

What you typically need to look for is non-interactive user sign-in logs. Non-interactive sign-ins are related to login events performed on behalf of a user where usernames and passwords were not used (read: tokens). You want to review the sign-ins to determine if there were authentication events from IP addresses unrelated to normal employee activity, which can include discrepancies in geographical locations, and out-of-hours activity. Service principal sign-ins would also be of interest, however it would be more difficult to determine odd behavior as you wouldn’t have user sign-ins to compare with.
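If you export the sign-in logs, the review described above can be partly automated. The following is a minimal sketch that assumes each record is a dictionary with `isInteractive`, `ipAddress`, and `createdDateTime` keys (ISO 8601 timestamps with an explicit offset); the field names, network range, and working hours are assumptions for illustration and should be adapted to your own export and environment.

```python
from datetime import datetime
from ipaddress import ip_address, ip_network


def suspicious_non_interactive(sign_ins, expected_networks, work_hours=(7, 19)):
    """Return non-interactive sign-ins from unexpected networks or outside working hours."""
    networks = [ip_network(net) for net in expected_networks]
    start, end = work_hours
    flagged = []
    for entry in sign_ins:
        if entry["isInteractive"]:
            continue  # only token-based (non-interactive) sign-ins are of interest here
        ip = ip_address(entry["ipAddress"])
        when = datetime.fromisoformat(entry["createdDateTime"])
        off_network = not any(ip in net for net in networks)
        # The hour is taken in the timestamp's own offset, not local office time.
        out_of_hours = not (start <= when.hour < end)
        if off_network or out_of_hours:
            flagged.append(entry)
    return flagged


# Made-up log entries using documentation IP ranges.
logs = [
    {"ipAddress": "203.0.113.10", "createdDateTime": "2024-03-01T10:00:00+00:00", "isInteractive": False},
    {"ipAddress": "198.51.100.7", "createdDateTime": "2024-03-01T10:30:00+00:00", "isInteractive": False},
    {"ipAddress": "203.0.113.11", "createdDateTime": "2024-03-01T02:14:00+00:00", "isInteractive": False},
    {"ipAddress": "198.51.100.9", "createdDateTime": "2024-03-01T03:00:00+00:00", "isInteractive": True},
]
flagged = suspicious_non_interactive(logs, ["203.0.113.0/24"])
print([entry["ipAddress"] for entry in flagged])
```

Flagged entries still need a human to judge them, since remote workers and scheduled jobs can legitimately appear off-network or out of hours.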

You could also review Azure’s risky sign-ins page, as these issues are likely to show up already classified. Just make sure your filters include non-interactive sign-in methods.

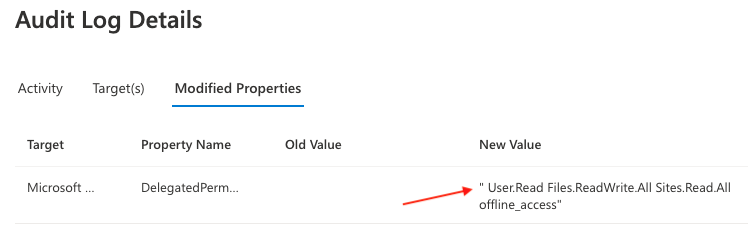

Review app audit logs

In the same window underneath sign-in logs, you’ll find the audit logs section. Audit logs will provide you with crucial information relating to when an app was integrated, by whom, and which permissions were delegated.

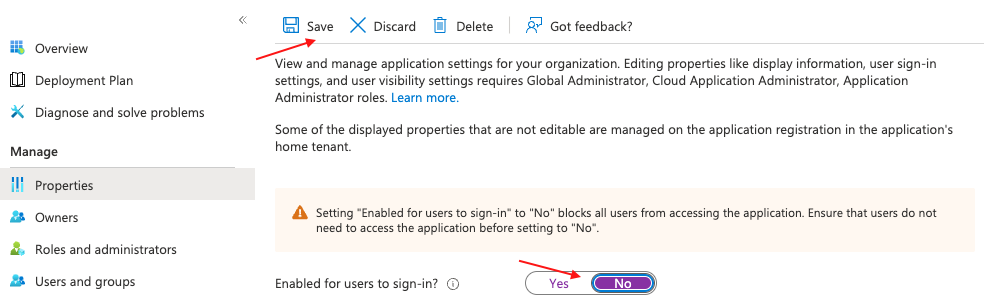

Disable the app

If you’ve determined that an app was involved in an incident, the first step would be to disable the app to prevent malicious actors from performing any further authentication. Under the application’s properties, change the setting “Enable for users to sign-in?” from “Yes” to “No”, followed by clicking “Save.”

Revoke all refresh tokens

Disabling the app is not enough to prevent attackers from maintaining access to your environment. Refresh tokens provide a way for apps to retrieve new access tokens without bugging users with pesky sign-in screens. Access tokens are typically valid for 60 to 90 minutes, and if a refresh token has been issued, the token holder can request new access tokens for up to 90 days!

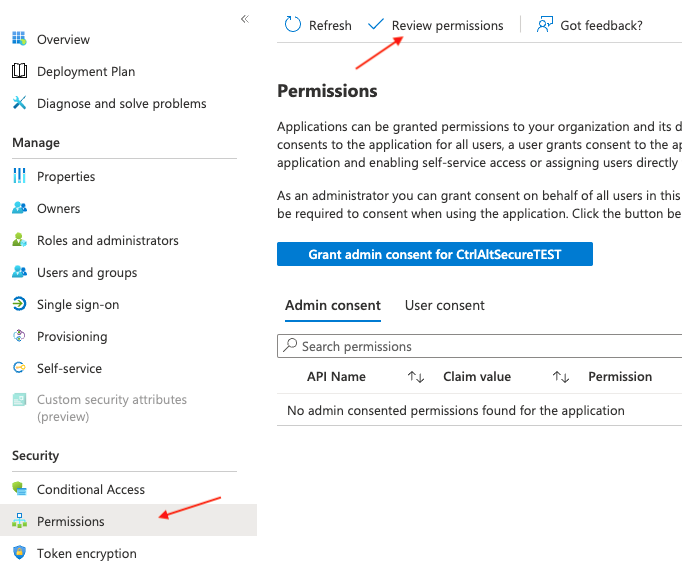

So, revoking refresh tokens is an important step as part of the mitigation and recovery steps. This step can be performed with some PowerShell – luckily Microsoft provides pre-generated scripts for you to copy and paste. Click on ‘Permissions’ for the app, followed by ‘Review permissions.’

In the new window, click on ‘This application is malicious and I’m compromised.’ This will present you with the necessary PowerShell scripts to remove users from the app, revoke all permissions granted to the app, and finally to revoke refresh tokens associated with the app.

What to do if the initial access token was stolen

The initial access token cannot be revoked. In practice, if an attacker has managed to steal an access token it will be valid for the remainder of its lifespan, which is typically one hour. This is true even if the account is disabled, the compromised app deleted, and all refresh tokens revoked. If you’re responding to an incident, you will need to keep an eye on audit logs for an hour or more after performing the above steps to make sure the still-valid access token isn’t being used to perform actions in the environment.
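The monitoring window is simple arithmetic, sketched below so it can be dropped into an incident timeline. The 90-minute default is the upper end of the typical access token lifetime mentioned earlier, and the 15-minute safety margin is an assumption of this example, not a Microsoft recommendation.

```python
from datetime import datetime, timedelta, timezone


def monitor_until(revoked_at,
                  access_token_lifetime=timedelta(minutes=90),
                  margin=timedelta(minutes=15)):
    """Return the time until which logs should be watched after revoking refresh tokens."""
    return revoked_at + access_token_lifetime + margin


# Example: refresh tokens revoked at 12:00 UTC.
revoked = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
print(monitor_until(revoked))  # 2024-03-01 13:45:00+00:00
```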

Microsoft’s response to this was to develop something called continuous access evaluation. However, they admit in the article that it does not address a scenario where an attacker exfiltrated the token outside of a trusted network, in which case conditional access policy enforcement would be required to address the issue. Continuous access evaluation is ideal for handling specific cases of user access into the environment such as employee contract termination, or scenarios where conditional access policies are violated.

Conclusion

This article should have given you a better understanding of the most common issues presented when reviewing SaaS apps integrated into your environment.

Determining whether using an app would result in compromise is not a simple task, especially if you haven’t observed malicious behavior. As such, the best course of action is to consider all angles, which include the business case of users requiring its use, the permission scopes, and whether the vendor’s security practices are in line with your requirements.

SaaS is a new(ish) frontier that can be really daunting to defend against attackers, but it's not impossible to reduce risk without simply blocking access to SaaS. And, remember: denying users access to tools will make them find ways around the limitations.

We hope this article helps you get a better handle on how to determine if you’ve been compromised, and respond to incidents involving SaaS apps and/or OAuth integrations to your core work platforms.