Learn how to quickly detect and mitigate business email compromise (BEC).

You get a call from your CFO: “Jenkins! ACME just called to find out why we haven’t paid their invoices for the last 3 months. Didn’t you make payment last week?”

You think back a bit. “Yip! I received another invoice a few days ago and made payment yesterday. I also paid the contractor doing renovations on your house. By the way, congrats on the new kitchen.”

Many companies have had similar incidents over the last couple of years. It’s a classic business email compromise (BEC) scenario: an attacker gained access to the mailbox of Jenkins in accounting, intercepted email from legitimate creditors, replaced their banking details with the attacker’s own, and even forged invoices from non-existent suppliers. Forged emails were then sent in the name of the CEO or CFO to approve the payments.

But how did they manage to gain access to the account? Our security team enforced multi-factor authentication (MFA) a few weeks ago. We’re supposed to be secure!?

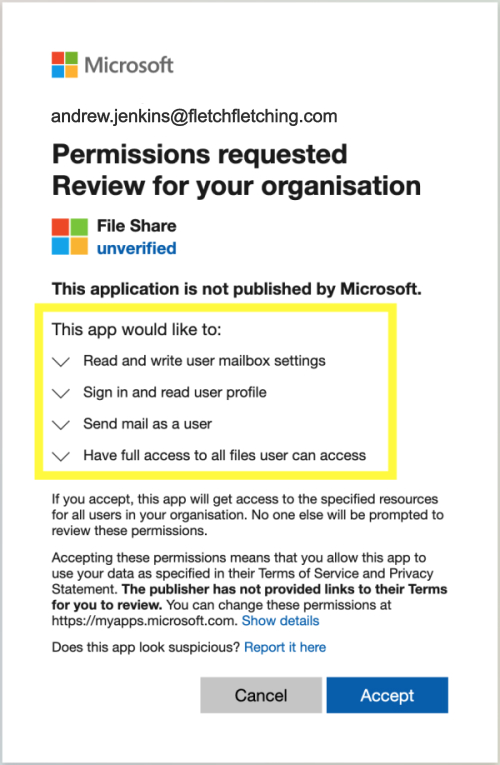

As detailed in our blog post about consent phishing, this attack method bypasses MFA because the malicious third-party integration (sometimes called an OAuth app) is issued its own authentication token once the user consents. MFA checks are only applied when logging in with your username and password, so in this case the attacker obtained a valid access token for Jenkins’ account without ever having to log in as Jenkins.

While this isn’t necessarily the same level of access that a username/password combo provides, it might be, depending on the scopes Jenkins granted the third-party integration app when they clicked ‘Accept’.

The list of third-party integration scopes can include anything from relatively benign things like retrieving your name, surname, and email address, to more dangerous or excessive permissions such as full access to your mailbox, the ability to configure mail rules to forward or delete email, and full access to your OneDrive or SharePoint files. Worst-case scenario: if you belong to groups with password reset capabilities, the attacker may be able to perform full account takeovers.
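
To make the difference between benign and dangerous scopes concrete, here is a minimal sketch that sorts a consent request’s scopes into rough risk buckets. The scope names are Microsoft Graph delegated permissions, but the tiering itself is my assumption for illustration, not an official classification, and `classify_scopes` is a hypothetical helper.

```python
# Illustrative risk tiers for Microsoft Graph delegated permissions.
# The tiering is an assumption for this sketch, not an official classification.
HIGH_RISK_SCOPES = {
    "Mail.Read",
    "Mail.ReadWrite",
    "Mail.Send",
    "MailboxSettings.ReadWrite",  # covers creating or changing inbox rules
    "Files.ReadWrite.All",
    "Sites.ReadWrite.All",
}
LOW_RISK_SCOPES = {"openid", "profile", "email", "User.Read"}


def classify_scopes(scope_string: str) -> dict[str, list[str]]:
    """Split a space-separated scope string into rough risk buckets."""
    buckets: dict[str, list[str]] = {"high": [], "low": [], "review": []}
    for scope in scope_string.split():
        if scope in HIGH_RISK_SCOPES:
            buckets["high"].append(scope)
        elif scope in LOW_RISK_SCOPES:
            buckets["low"].append(scope)
        else:
            # Anything not explicitly tiered needs a human decision.
            buckets["review"].append(scope)
    return buckets


if __name__ == "__main__":
    print(classify_scopes("User.Read Mail.ReadWrite offline_access"))
```

Anything that lands in the `review` bucket deserves a closer look. `offline_access`, for example, is what lets an app request refresh tokens, so its impact depends on what else was granted alongside it.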

How do you detect and respond to such incidents?

The main issue is detection. In my experience as an incident responder working with Fortune 500 companies at MWR Infosecurity, I found that BEC attacks are usually detected when associated parties start asking questions about non-payment (or unrecognized payments), which can take weeks or months from the day of compromise. By this point your cloud provider’s logs are likely to have rolled over and you’re unlikely to find much useful information to populate your incident timeline.

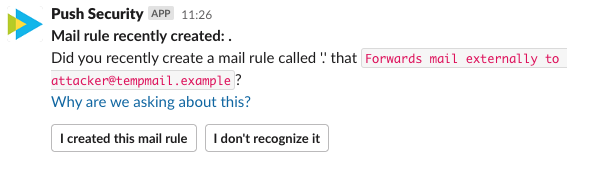

Shameless plug alert: Push’s ChatOps functionality can greatly assist here as it detects such malicious rules when created, and sends a message to the owner of the account (Jenkins) asking if they created the rule. Sometimes a user will have a legitimate use for creating mail rules to forward messages to another account, and this allows them to acknowledge the rule and mark it as safe. In case they didn’t create it, they can flag it as such and this will cause an alert to be sent to their security team. This is practically instant detection and invaluable when preventing fraudulent payments. And getting input from the account owner cuts way down on alert fatigue for your team.

Mitigate the attack

Once you’ve detected the incident, your next step is to remediate. Typically, this would require someone on the security team to find the offending rule in your cloud provider’s control panel to disable it, which can take some time, depending on the team’s availability and other factors.

Detecting the creation of malicious mail rules requires you to configure policies and alerts in your cloud provider’s control panel, and requires someone from the security team to monitor for notifications. If your IT person is also responsible for security in your organization, it’s unlikely that they can spend an appropriate amount of time looking at alerts and, in many cases, they would need to follow up with employees to confirm whether they had indeed created the rules. If you’re a larger organization, your dedicated security person will likely have higher priority tasks, too.
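
If you wanted to script a first pass over mail rules yourself, the check could look something like the sketch below. It assumes each rule is a dictionary shaped like the Microsoft Graph `messageRule` resource (an `actions` object with `forwardTo`, `redirectTo`, `delete`, and so on). The helper names and the idea of flagging every delete rule are my assumptions, and this is not how Push’s detection is implemented.

```python
FORWARDING_ACTIONS = ("forwardTo", "forwardAsAttachmentTo", "redirectTo")


def external_recipients(rule: dict, internal_domains: set[str]) -> list[str]:
    """Return addresses outside internal_domains that the rule sends mail to."""
    actions = rule.get("actions") or {}
    found = []
    for key in FORWARDING_ACTIONS:
        for recipient in actions.get(key) or []:
            address = (recipient.get("emailAddress") or {}).get("address", "")
            if not address:
                continue
            # Everything after the last "@" is treated as the domain.
            domain = address.rpartition("@")[2].lower()
            if domain not in internal_domains:
                found.append(address)
    return found


def is_suspicious(rule: dict, internal_domains: set[str]) -> bool:
    """Flag rules that forward mail externally or silently delete it."""
    actions = rule.get("actions") or {}
    hides_mail = bool(actions.get("delete") or actions.get("permanentDelete"))
    return hides_mail or bool(external_recipients(rule, internal_domains))
```

A rule flagged here is only a candidate. As noted above, someone still has to ask the mailbox owner whether they created it, since plenty of forwarding rules are legitimate.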

A breach is usually discovered when someone notices unrecognized payments, vendors query a lack of payments, or phishing emails are sent to fellow employees or contacts outside of your organization. If an attacker is careful to avoid causing too much interruption, it’s likely that you won’t discover the breach until all the damage has been done. By this point, an investigation will usually reveal very little, because important artifacts have disappeared as logs rolled over.

If you’re using Push, we would automatically detect the mail rule and talk to the employee whose mailbox the rule was created in. If they didn’t set the rule up themselves, we would assume it was created by an attacker and alert your security team. Push’s ChatOps will disable the offending rule and mark it as suspicious.

If this were a typical credential compromise scenario, the account’s password would be reset and everyone would go about their lives. However, since no credentials were compromised in our example, you’d go on to the next step to…

Remove the app’s permissions and revoke the tokens

As I mentioned earlier, third-party integration apps generate tokens, which can be valid for anywhere from an hour to 24 hours or more, depending on the integrating app, how it is being used, and whether it makes use of refresh tokens.
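
To see why the token lifetime matters for remediation, here is a deliberately simplified model of how long an attacker’s access can persist. It assumes an access token stays usable until it expires and that a refresh token can keep renewing it until it is revoked. Real platforms add nuances that this sketch ignores, and `access_persists` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone


def access_persists(
    issued_at: datetime,
    lifetime: timedelta,
    has_refresh_token: bool,
    refresh_revoked: bool,
    now: datetime,
) -> bool:
    """Simplified model: can the app still reach the account at `now`?"""
    # The current access token works until it expires.
    access_token_valid = now < issued_at + lifetime
    # A refresh token that has not been revoked can mint new access tokens.
    can_renew = has_refresh_token and not refresh_revoked
    return access_token_valid or can_renew


if __name__ == "__main__":
    issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
    a_month_later = issued + timedelta(days=30)
    # With an unrevoked refresh token, a one-hour token is no real limit.
    print(access_persists(issued, timedelta(hours=1), True, False, a_month_later))
```

In this model a password reset changes nothing, which is why the steps below focus on removing the app’s permissions and revoking its tokens.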

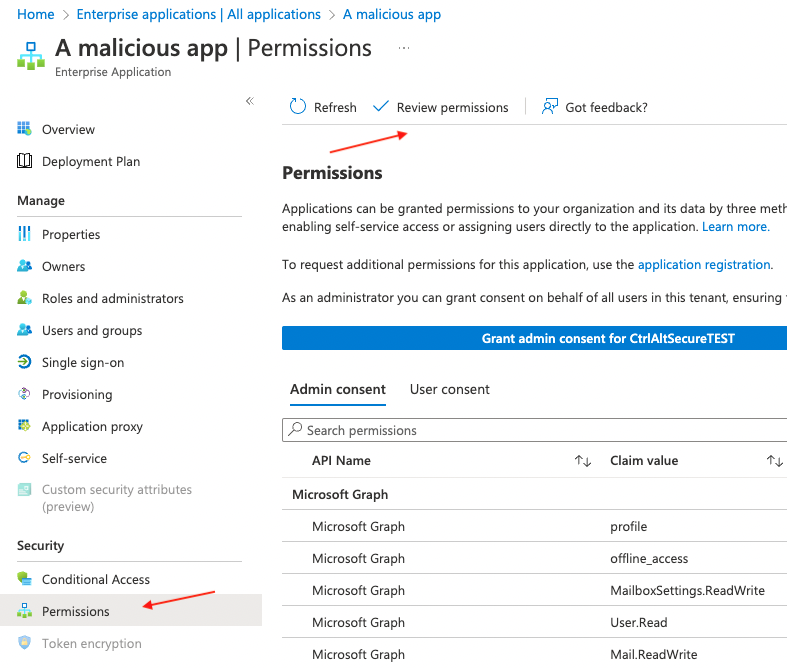

Invalidating third-party integration access permissions requires accessing your cloud provider’s control panel. In this example, you need to revoke access for a malicious app in a Microsoft 365 tenant. Microsoft’s guidance on this is very useful, but unfortunately the process is not as simple as just pressing a button.

To view Microsoft’s recommendations for dealing with a malicious app, you’d need to navigate to the Enterprise applications section in Azure, and locate the app by searching for its name or Application ID, which can be found in the Push app’s OAuth integrations page. In the app menu, click on ‘Permissions,’ then ‘Review permissions.’

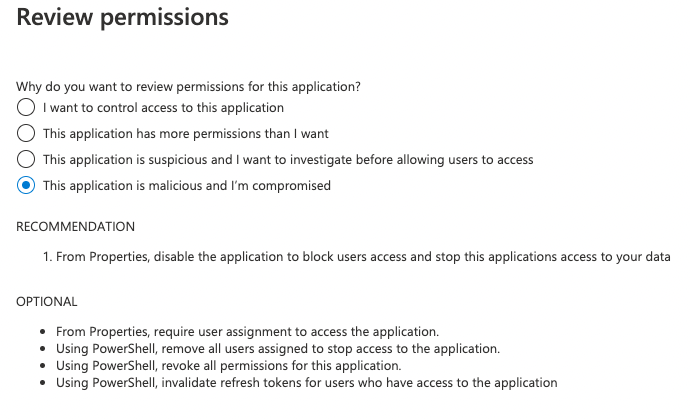

On the slide-out menu, select “This application is malicious and I’m compromised.”

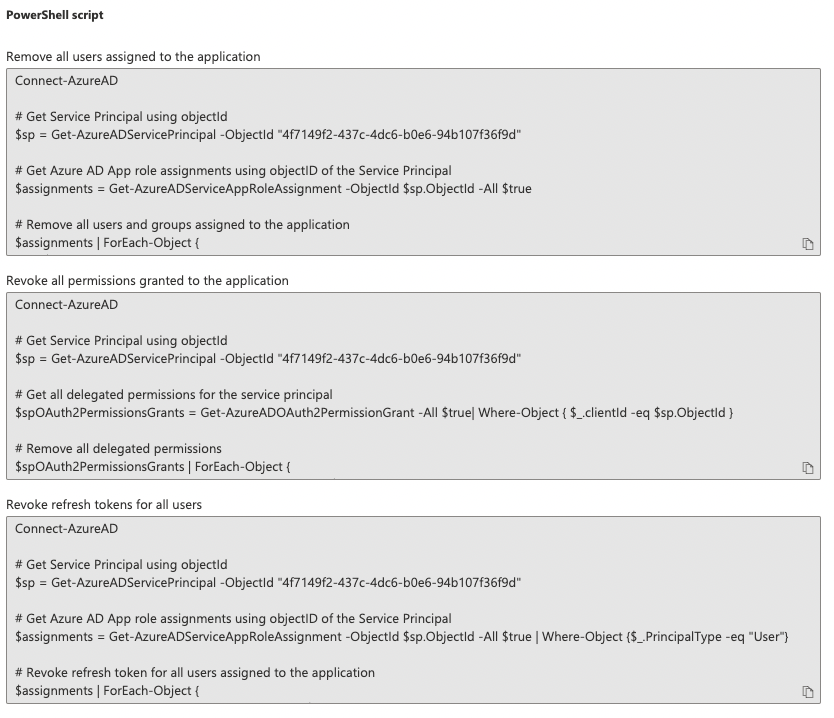

This will provide you with pre-generated PowerShell scripts to 1) Remove all users assigned to the application, 2) Revoke all permissions granted to the application, and 3) Revoke refresh tokens for all users.
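
The scripts Microsoft generates are PowerShell, and you should use those for a real incident. Purely to illustrate what the three steps amount to, here is a Python sketch that builds the list of Microsoft Graph requests I understand to be the rough equivalents. It sends nothing, all IDs are placeholders you would look up first, and the mapping of steps to endpoints is my assumption, so check it against the Graph documentation before relying on it.

```python
GRAPH = "https://graph.microsoft.com/v1.0"


def build_revocation_plan(
    service_principal_id: str,
    assignment_ids: list[str],
    grant_ids: list[str],
    user_ids: list[str],
) -> list[tuple[str, str]]:
    """Return (HTTP method, URL) pairs mirroring the three remediation steps."""
    plan: list[tuple[str, str]] = []
    # 1) Remove users assigned to the application.
    for assignment_id in assignment_ids:
        plan.append((
            "DELETE",
            f"{GRAPH}/servicePrincipals/{service_principal_id}"
            f"/appRoleAssignedTo/{assignment_id}",
        ))
    # 2) Revoke delegated permission grants held by the application.
    for grant_id in grant_ids:
        plan.append(("DELETE", f"{GRAPH}/oauth2PermissionGrants/{grant_id}"))
    # 3) Revoke refresh tokens (sign-in sessions) for each affected user.
    for user_id in user_ids:
        plan.append(("POST", f"{GRAPH}/users/{user_id}/revokeSignInSessions"))
    return plan


if __name__ == "__main__":
    for method, url in build_revocation_plan("sp-id", ["a1"], ["g1"], ["u1"]):
        print(method, url)
```

Building the plan before executing anything gives you a list you can review and attach to the incident timeline. Note that this sketch only covers delegated permission grants, so any application permissions granted to the app would need separate handling.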

How to prevent similar attacks

A very important step following a compromise is to review what happened, how it happened, and what could be done to prevent the incident from occurring again. The interesting part about this incident is that neither a weak password nor a lack of MFA led to the compromise. It came down to social engineering: an account masquerading as the CFO instructed an employee to click a link.

For the purposes of this hypothetical incident, we’ll establish that the following occurred:

Andrew Jenkins was targeted in a phishing attack

Andrew authenticated via Microsoft 365, which is a legitimate and expected authentication mechanism and occurs almost daily

No attachments were downloaded, thus in this isolated incident there was no code execution on Andrew’s host, meaning that Anti-Virus or Endpoint Detection & Response (EDR) would not have prevented it

The attacker gained full access to Andrew’s mailbox

The malicious app was disabled by Microsoft after some time, so a full investigation into its capabilities was not possible. We don’t know whether another phishing page was presented after the integration took place, thus to be on the safe side we need to assume this happened and led to credential compromise.

The app was unverified, which has historically been true in most of these scenarios. Publishers need to associate a Microsoft Partner Network (MPN) ID with the app, which follows a verification process, in order to have it appear as a verified app. This Microsoft 365 tenant was configured to allow unverified integrations due to an oversight following an app migration project.

This leads us to the following to help prevent similar attacks from occurring in future, and to make sure there is no opportunity for the attacker to leverage any existing foothold:

Disable the integration and remove the malicious app’s permissions

Reset Andrew Jenkins’ credentials

Be aware of and review newly created mail rules

Confirm that the Microsoft 365 tenant is set to disallow integrations from unverified apps

Note: as of November 9th, 2020, integrations with unverified apps are disabled by default.

Communicate with employees and other affected parties to be wary of these types of attacks

Perform regular audits against your Microsoft 365 tenants to highlight any discrepancies and integrations with unusual or unnecessary permissions.
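
As a rough idea of what such an audit could check, the sketch below walks a list of integrations and reports any that are unverified or hold risky scopes. The input format is hypothetical: I’m assuming you have exported each app as a dictionary with a `displayName`, a `verifiedPublisher` object (as found on Graph service principals), and a flattened `scopes` list. `audit_integrations` is an illustrative helper, not part of any product.

```python
def audit_integrations(apps: list[dict], risky_scopes: set[str]) -> list[dict]:
    """Report integrations that are unverified or hold risky scopes."""
    findings = []
    for app in apps:
        publisher = app.get("verifiedPublisher") or {}
        # No publisher ID means the app has not been publisher-verified.
        unverified = not publisher.get("verifiedPublisherId")
        risky = sorted(set(app.get("scopes", [])) & risky_scopes)
        if unverified or risky:
            findings.append({
                "name": app.get("displayName", ""),
                "unverified": unverified,
                "risky_scopes": risky,
            })
    return findings


if __name__ == "__main__":
    exported = [
        {"displayName": "Calendar Sync",
         "verifiedPublisher": {"verifiedPublisherId": "123"},
         "scopes": ["User.Read"]},
        {"displayName": "Invoice Viewer",
         "verifiedPublisher": {},
         "scopes": ["Mail.ReadWrite", "User.Read"]},
    ]
    for finding in audit_integrations(exported, {"Mail.ReadWrite"}):
        print(finding)
```

Verified apps with risky scopes are reported too, on purpose: a verified publisher is a useful signal but not a guarantee.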

Microsoft’s move to safe defaults that limit integrations from unverified publishers was a step in the right direction. However, there have been cases where attackers used compromised publishers to perform similar attacks.

Conclusion

While the process isn’t exactly straightforward, catching early indicators like malicious mail rules helps you prevent an attacker from launching additional attacks like phishing campaigns as they try to gain access to sensitive business data. Removing the mail rule is just the start of the process. You really need to revoke permissions and take the other steps we covered in this post to stop an attack from going any further. We’ll publish some more content on SaaS incident response on our blog, so subscribe to get our guidance straight into your inbox.