An employee has added a new integration to your Azure tenant or Google Workspace. How do you assess risk? We’ll cover a few techniques in this article.

An employee has added a new app-to-app (OAuth) integration to your Azure tenant or Google Workspace, but you’re unsure what it is or what risk it poses to your organization. This article covers a few techniques to help you assess that risk.

Introduction

There are a few key questions to keep in mind when evaluating an OAuth integration:

Is the source (usually the app vendor) trustworthy?

What can it do if it is not trustworthy? Does it have access to your data? How much access? Does it request more permissions than it should need to function?

What does it actually do (i.e. what do the logs indicate)? Which teams or individuals will be using it and for what purposes?

A variety of data sources can help answer each of these questions. We’ll break them down in the sections that follow.

Name and Verification Status

Every OAuth integration has a name, and both Microsoft and Google have verification processes that allow OAuth integrations to be verified as belonging to a particular company. Microsoft has a publisher verification process that depends on its Microsoft Cloud Partner Program, whereas Google has a brand verification process with different levels of requirements depending on the level of data access requested.

While being verified does not mean an integration poses no risk – in fact, there have been malicious phishing campaigns using verified publishers – it at least provides some extra assurance around what the integration actually is. This is especially true with Google integrations where access to restricted scopes has been granted.

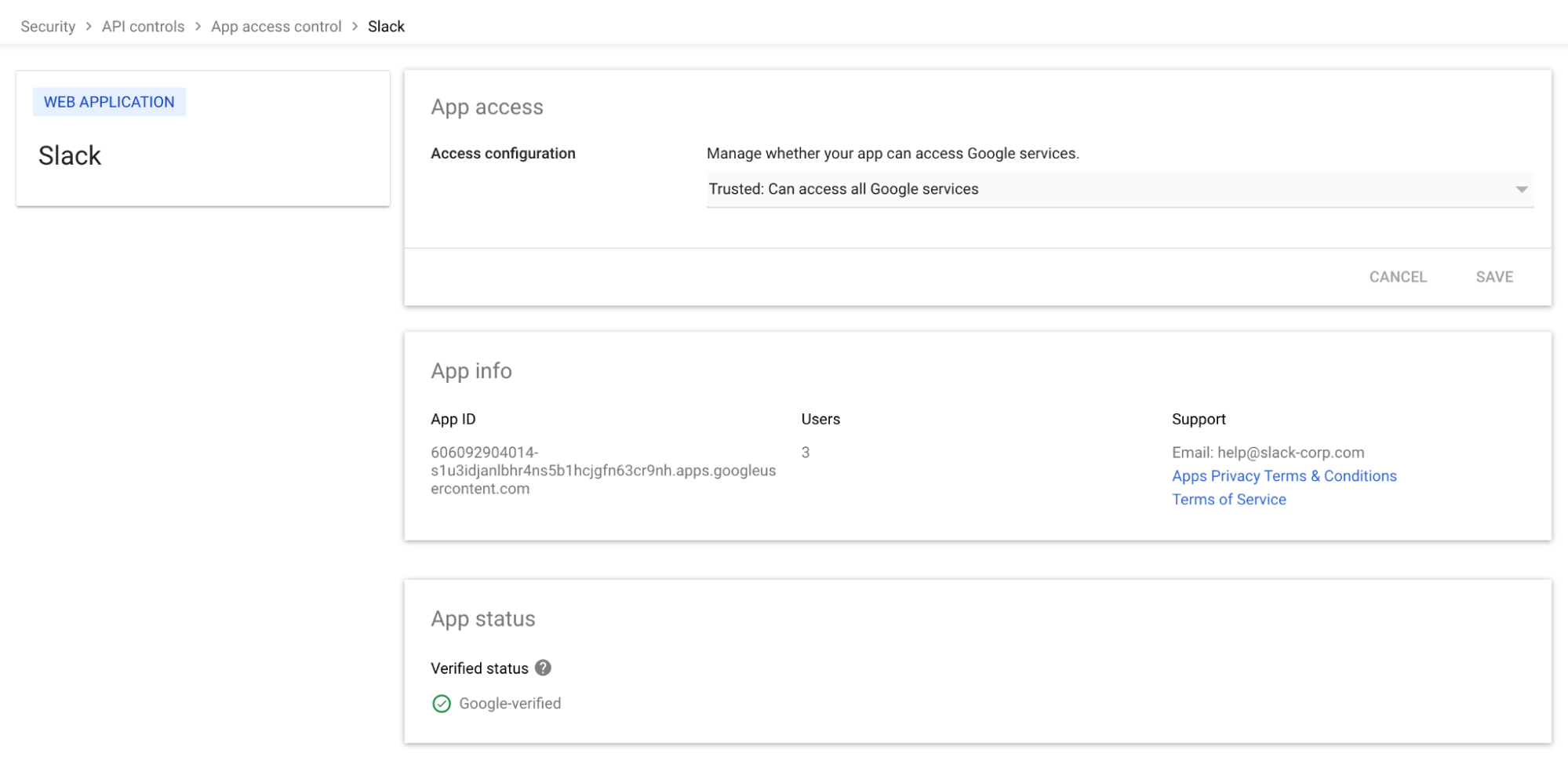

For example, consider the Slack OAuth integration for Google Workspace. The name and icon make it very clear what the integration is claiming to be, and the verification status shows that Google has verified this data. You can quickly confirm the vendor is who they say they are, accept them as a third-party vendor, and move on to more traditional risk assessments. You can start to address questions like, “Should Slack be used within the organization?”, “Does Slack as a company meet required security and compliance standards?”, “Is an OAuth integration required or should it be used purely as a web or desktop app?”, and so on.

Reply URLs and Approved Domains

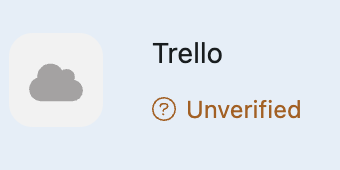

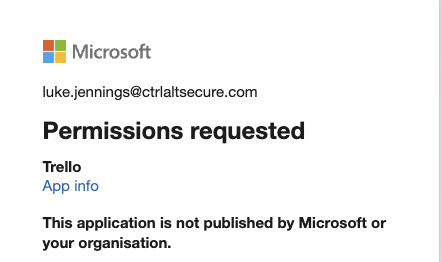

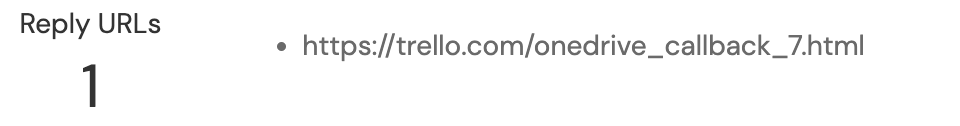

Some integrations may be unverified or have very generic or confusing names that give little indication as to who is actually behind the integration. For example, consider the following Microsoft OAuth integration:

This integration says that it’s Trello, the well-known SaaS platform. However, it’s unverified, so how do we know it really is Trello and not a malicious app masquerading as Trello? Reply URLs (Microsoft) and approved domains (Google) are another useful source of data about an integration, as they list its authorized callback URLs.

During a common code-based flow for an OAuth consent, once the user has authorized the request, a redirect needs to be made back to a domain/URL that is controlled by the OAuth app vendor to pass the code back to the app. Then the app can use the code to get a token that can be used to act on behalf of the user.

If any domain or URL could be used, nothing would stop an attacker from impersonating a legitimate OAuth app and having the details passed back to a domain they control. This is much less of an issue with code-based flows, since the attacker would also need the app secrets to exchange the code for a token. With implicit flows, however, the token is passed back directly, so an impersonation attack would be possible, and implicit flows are still somewhat common. To guard against this, the app owner has to specify exactly which domains or URLs codes and tokens may be sent to.
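The allowlist check described above can be sketched roughly as follows. This is a simplified illustration, not how Microsoft or Google implement it: the function name and the registered URI are hypothetical, and real providers apply additional rules (for example around localhost and native apps).

```python
from typing import Iterable

# Hypothetical registration data, for illustration only.
REGISTERED_REDIRECT_URIS = {
    "https://app.example.com/oauth/callback",
}


def is_allowed_redirect(requested_uri: str, registered: Iterable[str]) -> bool:
    """Return True only if the requested URI exactly matches a registered one.

    Exact string comparison means look-alike hosts and extra path
    segments are rejected rather than partially matched.
    """
    return requested_uri in set(registered)


if __name__ == "__main__":
    for uri in (
        "https://app.example.com/oauth/callback",
        "https://attacker.example.net/oauth/callback",
    ):
        print(uri, is_allowed_redirect(uri, REGISTERED_REDIRECT_URIS))
```

Exact matching is used here instead of prefix or substring matching so that a host such as `app.example.com.attacker.example.net` cannot pass the check.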

For Microsoft, this is one of the many fields returned from Graph API if you enumerate the service principals for apps installed on your tenant.

In this case, the app has only one authorized reply URL, which points to trello.com. This means that authorization tokens can only be sent to this URL. So, for the integration to be used (or abused) the developer (or attacker) would need control of that domain. In this example, you’d have some assurance that this integration is legitimately associated with Trello. However, there are no guarantees. It’s possible for an attacker to put a range of domains in a malicious integration they control and they only need control of one domain to make use of it. So if attackerdomain.com was also present, then trello.com could just be an effort by an attacker to make their integration appear more legitimate. Therefore, you need to consider all domains present as a whole, as the presence of one known legitimate domain isn’t enough on its own if other domains might be questionable.
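One way to review all reply URLs as a whole is to extract every host and flag any that fall outside the domains you expect for that vendor. The sketch below assumes you already have a service principal object from Graph API parsed into a dict with a `replyUrls` list. The sample data, the helper names, and the expected-domain set are hypothetical.

```python
from urllib.parse import urlsplit


def reply_url_hosts(service_principal: dict) -> set:
    """Collect the lowercase hostnames of every reply URL on the app."""
    hosts = set()
    for url in service_principal.get("replyUrls", []):
        host = urlsplit(url).hostname
        if host:
            hosts.add(host.lower())
    return hosts


def unexpected_hosts(service_principal: dict, expected_domains: set) -> set:
    """Return reply URL hosts that are not an expected domain or a subdomain of one."""

    def matches(host: str, domain: str) -> bool:
        return host == domain or host.endswith("." + domain)

    return {
        host
        for host in reply_url_hosts(service_principal)
        if not any(matches(host, domain) for domain in expected_domains)
    }


if __name__ == "__main__":
    # Hypothetical service principal, shaped like a trimmed Graph API result.
    sample = {
        "displayName": "Trello",
        "replyUrls": [
            "https://trello.com/auth/callback",
            "https://attackerdomain.com/callback",
        ],
    }
    print(unexpected_hosts(sample, {"trello.com"}))
```

An empty result does not prove the app is legitimate. It only means that no reply URL points outside the domains you chose to trust.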

One caveat here is that this is much less of an issue when it comes to Google apps that have been through Google brand verification. Part of the verification process involves ensuring that the vendor owns the domains (approved domains) registered in any callbacks. Therefore, if it’s a Google verified app then you don’t have to worry about legitimate domains being impersonated by an attacker to give a fake sense of legitimacy.

It used to be possible to query the approved domains for a Google app via an undocumented API, but it recently stopped returning this information. The API still returns other details that can be useful during an investigation. See the example for Slack below; you can replace the project ID in the URL with any app project ID:

    curl -H "Origin: https://console.cloud.google.com" "https://clientauthconfig.googleapis.com/v1/brands/lookupkey/brand/19570130570?readMask=*&readOptions.staleness=0.02s&returnDeveloperBrand=true&returnDisabledBrands=true&key=AIzaSyCI-zsRP85UVOi0DjtiCwWBwQ1djDy741g"

    {
      "brandId": "19570130570",
      "projectNumbers": [
        "19570130570"
      ],
      "displayName": "Slack",
      "iconUrl": "https://lh3.googleusercontent.com/J5SGBWHMF0_vgcIekl1hEhJ1-_p_zsG3L0i1s_bU2bK_TiSLObT7kK1Le9tnme1h3zA",
      "supportEmail": "help@slack-corp.com",
      "homePageUrl": "http://slack.com/",
      "termsOfServiceUrls": [
        "https://slack.com/terms-of-service"
      ],
      "privacyPolicyUrls": [
        "https://slack.com/privacy-policy"
      ],
      "brandState": {
        "limits": {
          "defaultMaxClientCount": 36
        }
      },
      "verifiedBrand": {
        "displayName": {
          "value": "Slack",
          "reason": "APPEALED"
        },
        "storedIconUrl": {
          "value": "https://lh3.googleusercontent.com/J5SGBWHMF0_vgcIekl1hEhJ1-_p_zsG3L0i1s_bU2bK_TiSLObT7kK1Le9tnme1h3zA",
          "reason": "APPEALED"
        },
        "supportEmail": {
          "value": "help@slack-corp.com",
          "reason": "APPEALED"
        },
        "homePageUrl": {
          "value": "http://slack.com/",
          "reason": "APPEALED"
        },
        "privacyPolicyUrl": {
          "value": "https://slack.com/privacy-policy",
          "reason": "APPEALED"
        },
        "termsOfServiceUrl": {
          "value": "https://slack.com/terms-of-service",
          "reason": "APPEALED"
        }
      },
      "storedIconUrl": "https://lh3.googleusercontent.com/J5SGBWHMF0_vgcIekl1hEhJ1-_p_zsG3L0i1s_bU2bK_TiSLObT7kK1Le9tnme1h3zA",
      "consistencyToken": "2020-12-04T13:12:40.648327Z"
    }
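As a rough illustration of how such a response might be used, the sketch below collects every domain mentioned in the top-level brand fields so they can be compared against the vendor you expect. The field names come from the Slack response above. The function name is hypothetical, and because the API is undocumented, its response shape may change.

```python
from urllib.parse import urlsplit


def brand_domains(brand: dict) -> set:
    """Collect the domains referenced by a brand's URLs and support email."""
    urls = [brand.get("homePageUrl", "")]
    urls += brand.get("termsOfServiceUrls", [])
    urls += brand.get("privacyPolicyUrls", [])

    domains = set()
    for url in urls:
        host = urlsplit(url).hostname if url else None
        if host:
            domains.add(host.lower())

    # The support email can reveal a second domain worth checking.
    email = brand.get("supportEmail", "")
    if "@" in email:
        domains.add(email.rsplit("@", 1)[1].lower())
    return domains


if __name__ == "__main__":
    # Trimmed copy of the Slack response shown above.
    slack = {
        "displayName": "Slack",
        "supportEmail": "help@slack-corp.com",
        "homePageUrl": "http://slack.com/",
        "termsOfServiceUrls": ["https://slack.com/terms-of-service"],
        "privacyPolicyUrls": ["https://slack.com/privacy-policy"],
    }
    print(sorted(brand_domains(slack)))
```

For the Slack data this surfaces both `slack.com` and `slack-corp.com`, each of which you could then look up separately.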

Permissions

Both Google and Microsoft provide a very large number of permissions to give fine-grained control of what level of data access an OAuth integration has. This can be everything from a simple social login to access to high-risk data assets, like document stores and email inboxes, as well as administrative functionality.

It’s worth noting a few differences between how Microsoft and Google handle these permissions. While both have a very large number of fine-grained permissions for users to delegate, Microsoft also has the concept of App Roles, which administrative users can consent to as well. These are often similarly named to delegated permissions, except they give access to data for all users rather than just for the user granting consent.

For example, an ordinary user might be able to consent to grant access to their exchange email inbox using a delegated permission, but an app could also request access to an app role to allow access to all users’ email inboxes and an administrative user could consent to that using the same consent screen. Google does have similar capabilities but they are managed separately using domain-wide delegation.

Another important difference is that, as mentioned in the verification section above, Google has different verification requirements depending on the data access requested. Microsoft allows even unverified apps to request access to any data, whereas Google designates the scopes for some of its most sensitive data sources (such as Google Drive and Gmail) as restricted. An app requesting them must not only be verified but must also have undergone a much more stringent manual security review, including third-party security testing.

Even without good reason to trust an OAuth integration, if the permissions it requests are extremely low risk then arguably it isn’t much of an issue. On the other hand, organizations that need a particularly stringent level of security may not be comfortable granting high-risk permissions to even fairly established SaaS vendors. Consequently, the permissions an integration requests are one of the most important data sources for evaluating its risk.
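A first-pass triage along these lines could map each granted scope to a risk tier and report the highest tier found. The scope strings below are real Google OAuth scopes, but the tier assigned to each one is an illustrative assumption rather than any vendor classification, and the function name is hypothetical.

```python
# Illustrative tiers only; adjust to your organization's risk tolerance.
SCOPE_RISK = {
    "openid": "low",
    "https://www.googleapis.com/auth/userinfo.email": "low",
    "https://www.googleapis.com/auth/userinfo.profile": "low",
    "https://www.googleapis.com/auth/calendar": "medium",
    "https://www.googleapis.com/auth/drive": "high",
    "https://mail.google.com/": "high",
}

# Unmapped scopes rank highest so they surface for manual review.
RISK_ORDER = ["low", "medium", "high", "unknown"]


def highest_risk(scopes) -> str:
    """Return the highest tier among the scopes, or 'low' if none are given."""
    tiers = [SCOPE_RISK.get(scope, "unknown") for scope in scopes]
    if not tiers:
        return "low"
    return max(tiers, key=RISK_ORDER.index)


if __name__ == "__main__":
    social_login = ["openid", "https://www.googleapis.com/auth/userinfo.email"]
    print(highest_risk(social_login))
    print(highest_risk(social_login + ["https://www.googleapis.com/auth/drive"]))
```

Ranking unknown scopes above known ones is a deliberate choice in this sketch, so that anything not yet classified is looked at by a person.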

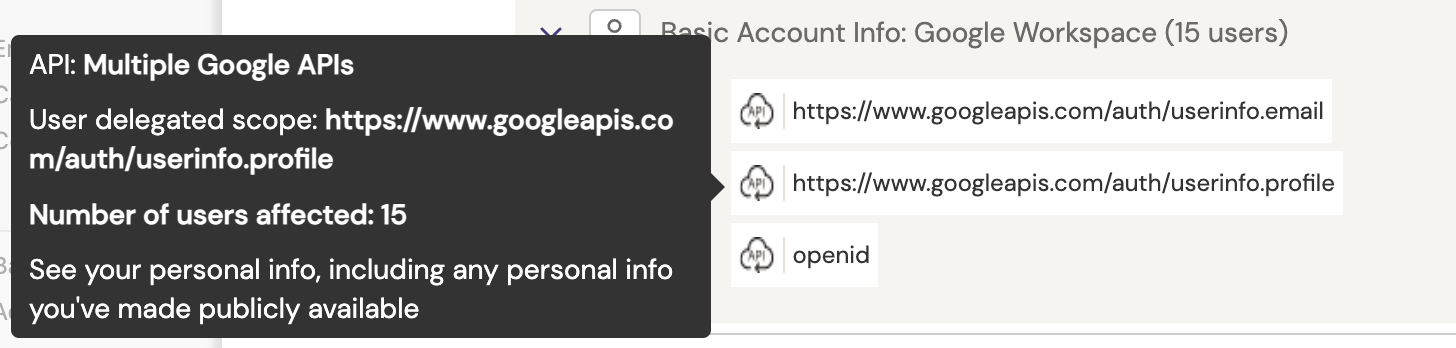

An important factor to consider is that permissions are not necessarily fixed to be the same for every user. If more than one employee makes use of the same SaaS integration, it’s possible they may grant different permissions depending on what the integration does and how they enabled it. For example, let’s consider the Slack integration we saw before:

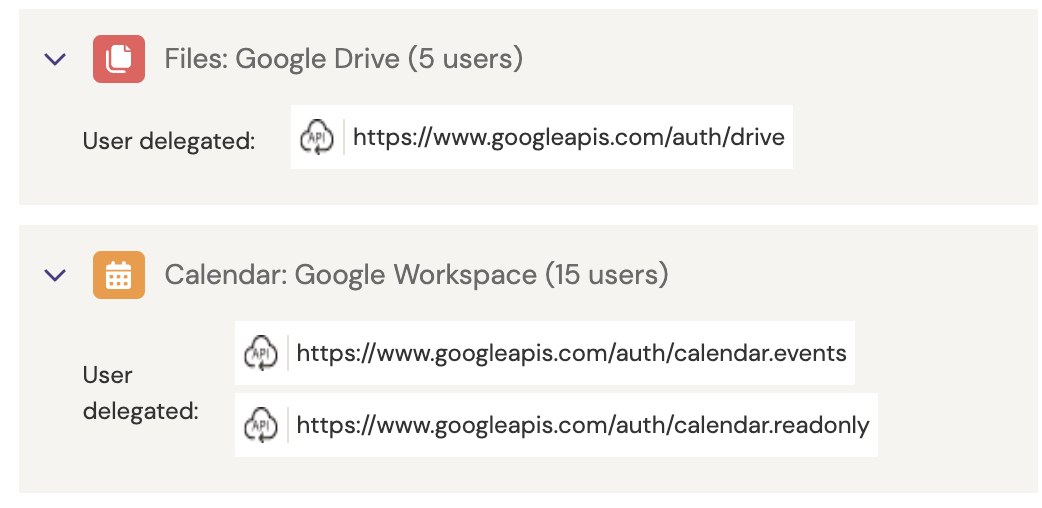

In this particular example, we have 15 users who have granted access to three different very low risk permissions concerning their basic account information, which typically are the minimum required in order to enable a simple social login. However, additional permissions have been granted for some other users:

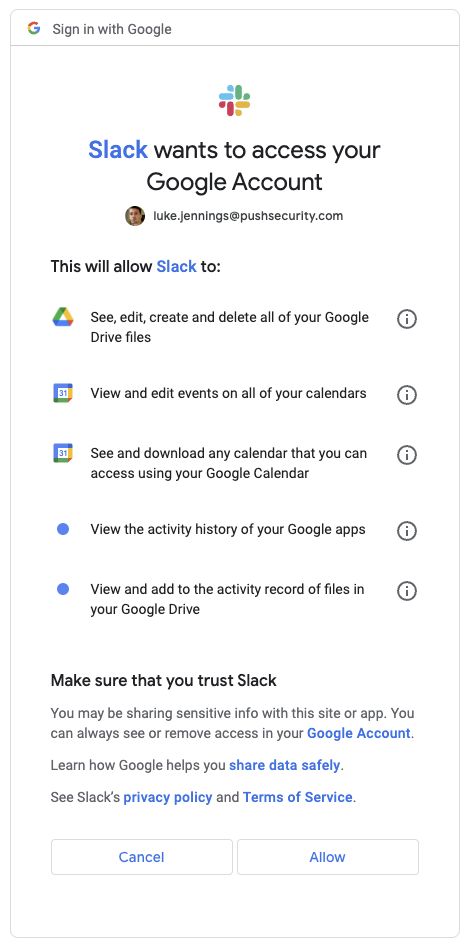

It seems 15 users have also allowed access to their Google calendars and 5 users have also allowed full access to their Google Drive. This is due to different employees adding different Slack apps to enable calendar and file integration. For example, a standard social login to Slack using a Google account won’t even present the user with a consent screen because it only requests the most basic scopes. However, add a sensitive Slack app integration, like the one for Google Drive, and the user will receive a consent screen that looks like this, which is where this difference between users comes from:

Even if Slack is an officially sanctioned SaaS provider for the organization, granting a third party complete Google Drive access might be seen as a compliance risk too far. In that case, you could revoke the file permissions to reduce risk.
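To see how grants differ between users for the same integration, you can count the distinct users behind each scope. The sketch below assumes a hypothetical export format, a list of records each holding a `user` and the `scopes` that user granted. The sample users are made up.

```python
from collections import defaultdict


def users_per_scope(grants) -> dict:
    """Count how many distinct users granted each scope."""
    users = defaultdict(set)
    for grant in grants:
        for scope in grant["scopes"]:
            # A set avoids double counting a user who appears in several records.
            users[scope].add(grant["user"])
    return {scope: len(members) for scope, members in users.items()}


if __name__ == "__main__":
    # Hypothetical grant records for a single integration.
    sample_grants = [
        {"user": "alice@example.com", "scopes": ["openid"]},
        {"user": "bob@example.com",
         "scopes": ["openid", "https://www.googleapis.com/auth/calendar"]},
        {"user": "carol@example.com",
         "scopes": ["openid", "https://www.googleapis.com/auth/drive"]},
    ]
    for scope, count in sorted(users_per_scope(sample_grants).items()):
        print(count, scope)
```

A breakdown like this makes it easier to decide whether revoking one high-risk scope would affect a handful of users or most of the organization.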

In cases of untrusted OAuth integrations or those that are difficult to verify, the overall risk remains very low if innocuous permissions like those required for social logins are the only permissions granted. In fact, the majority of OAuth integrations we see at Push do not request anything other than social login permissions. If you want to know more about social login risk, see our previous article here. However, much closer attention should be paid once you see unknown integrations with high-risk permissions, such as full access to file stores.

Activity Logs

It’s one thing to know what an integration can access in principle, due to its permissions, but it’s another to know what it’s actually doing. In one case, an integration may have requested permissions in order to access a user’s entire file store, but it may only use that functionality when specifically directed to as a result of a user attempting to share a file or some other trigger activity.

That isn’t to say there is no risk: if the vendor is compromised and the tokens are stolen, an attacker could access any files they like. However, an integration that constantly accesses all users’ files and syncs them in their entirety presents a very different risk profile. Additionally, the ability to determine what an integration has actually done is invaluable in an incident response scenario.

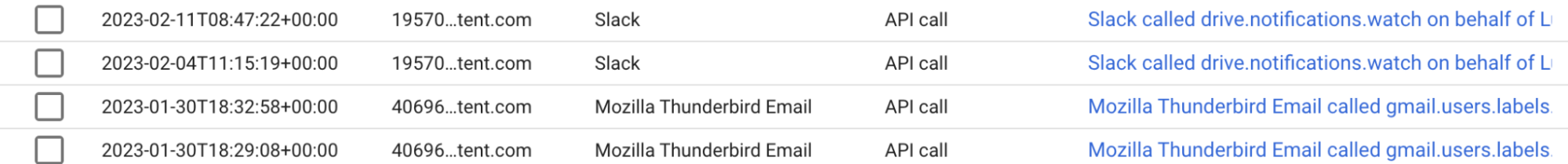

Microsoft and Google offer different options here, which aren’t always available by default. Google provides API call visibility for OAuth integrations, which gives extremely detailed insight into what an OAuth integration is doing and when. Here you can see the Slack integration using its Google Drive permissions to look for file change notifications, while the Thunderbird email integration is accessing some Gmail-related label data:

The key caveat with Google is that it’s not available on all plans. You can see here that it's only available using Enterprise, Education and Cloud Identity Premium licenses.

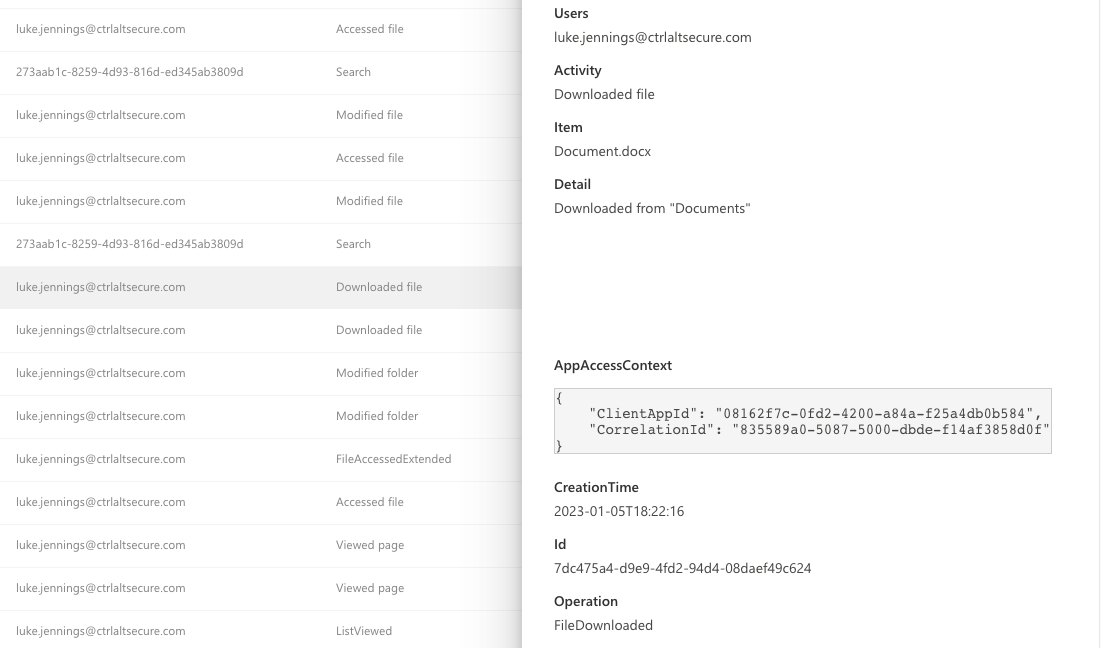

For Microsoft, rather than separate OAuth API call data, detailed audit data available as part of Microsoft Purview often gives context that can be traced back to OAuth integrations when that was the source. For example, here you can see the Mozilla Thunderbird OAuth integration being used to download a file from OneDrive. This is the same event you would get if a file was downloaded from a web interface, but in this case you can see in the AppAccessContext that it specifies a ClientAppId, which refers to the OAuth integration performing the action. This means you can track all activity specifically back to individual OAuth integrations separately from activity performed by a user within web interfaces - a very useful capability!
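Once audit records have been exported and parsed, grouping them by that ClientAppId is straightforward. The sketch below assumes each record is a dict with an `Operation` field and an optional `AppAccessContext` containing `ClientAppId`, as described above. The sample records and app IDs are placeholders, not real data.

```python
from collections import defaultdict


def operations_by_client_app(records) -> dict:
    """Group audit operations by the OAuth client app that performed them."""
    by_app = defaultdict(list)
    for record in records:
        context = record.get("AppAccessContext") or {}
        app_id = context.get("ClientAppId")
        # Records without a ClientAppId are skipped in this sketch.
        if app_id:
            by_app[app_id].append(record.get("Operation"))
    return dict(by_app)


if __name__ == "__main__":
    # Placeholder records; real audit events carry many more fields.
    sample_records = [
        {"Operation": "FileDownloaded", "UserId": "alice@example.com",
         "AppAccessContext": {"ClientAppId": "11111111-1111-1111-1111-111111111111"}},
        {"Operation": "FileAccessed", "UserId": "alice@example.com",
         "AppAccessContext": {"ClientAppId": "11111111-1111-1111-1111-111111111111"}},
        {"Operation": "FileDownloaded", "UserId": "bob@example.com"},
    ]
    for app_id, operations in operations_by_client_app(sample_records).items():
        print(app_id, operations)
```

The resulting app IDs can then be matched against the integrations enumerated in your tenant to attribute activity to a specific OAuth app.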

Conclusion

In this article, we have seen a range of ways that OAuth integrations for both Microsoft and Google can be investigated in order to gain a better understanding of their risk profile, as well as investigating what they actually do in an incident response scenario. While there are no hard and fast rules for when an integration should be considered safe or dangerous, hopefully this gives some idea as to how to perform a risk assessment to make a call depending on your organization’s risk tolerance level.