Computer-Using Agents (CUAs) are a new type of AI agent that drives your browser/OS for you. These tools (or future iterations of them) will enable low-cost, low-effort automation of common web tasks — including those frequently performed by attackers.

Computer-Using Agents (CUAs) are a new type of AI agent that drives your browser/OS for you. With the research preview release of OpenAI Operator last week, it’s likely that we’ll be seeing a lot more of this technology in the future as OpenAI iterates and competitors launch their own versions.

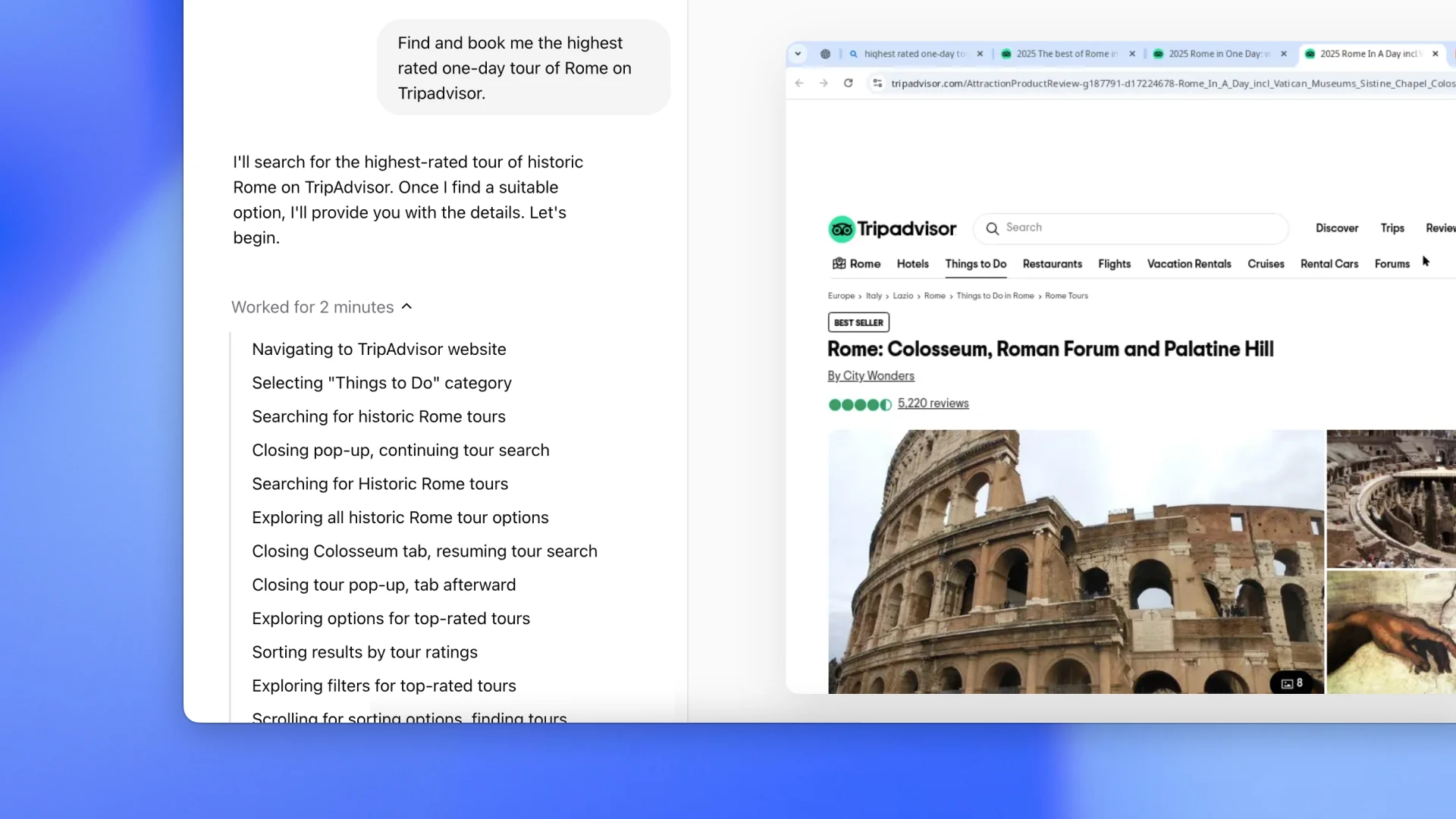

These agents operate through the same UI the user sees, rather than through code- or API-based add-ons or tools (e.g. accessed using API keys). In Operator’s case, the agent runs in its own browser, where it can navigate to and interact with webpages by typing, clicking, and scrolling. It effectively sees and interacts with pages as a human would, using human (not machine) identities, and takes actions on the web without requiring custom API integrations.

This means that a user describes a task, and Operator performs it autonomously on their behalf. The examples provided by OpenAI are things like booking a dinner reservation or shopping for groceries — but naturally the potential use cases are much, much broader, especially in a work context.

Obviously the broad impact of this technology is almost impossible to predict this early in the game. But since we’re focussed on identity security at Push, we can at least describe some of the very predictable impacts in this area.

CUAs like Operator are essentially very flexible no-code automation platforms. This means that these tools (or future iterations of them) will enable low-cost, low-effort automation of common web tasks, including the very tasks that app developers and vendors have worked hard to prevent from being automated, and those frequently performed by attackers.

Why do CUAs stand to benefit attackers more than previous AI tools?

Organizations have been concerned about the security and privacy implications of GenAI tools and platforms for a while now — mainly concerning the risk of inputting sensitive data into LLMs, and prompt injection attacks in which models can be tricked into disclosing internal data.

But so far, the impact of GenAI on attacker capabilities has been largely limited to using LLMs to write phishing emails and to assist with malware development: no doubt significant, but not exactly transformative. And although the concept of an AI agent is nothing new, agents haven’t been particularly common outside of research circles.

CUAs, on the other hand, use LLMs trained on datasets that make them far better at understanding and interacting with web pages. Couple that with what is essentially a production-grade integration between browser and LLM, and you have an agent that can understand and interact with websites to achieve an outcome (rather than simply scraping data), with minimal human input and oversight, and with much the same behaviors and capabilities as a human operator.

By performing actions autonomously on the user’s behalf, a CUA has a lot in common with a low/no-code automation platform like Zapier or Make.com, except that it acts in the browser as a user would rather than via API. And unlike low/no-code automations, it doesn’t need a rigid step-by-step description of the task to be automated; it can generate steps dynamically, as a human does.

All of this could already be done with other automation tools, but it’s the difference between writing code to automate a task by hand and asking a human assistant to do it for you: the effort required drops by orders of magnitude. This makes CUAs both more flexible and accessible to a much wider range of users.

How can CUAs be abused by attackers?

There are two main groups of attack to be aware of:

Attacks enabled by the technology (CUA)

Attacks against specific CUA tools/implementations (e.g. Operator)

Because attacks in the latter group depend on the specific CUA being targeted (and Operator is still in its “research preview” release), we’ll focus on how attackers can potentially use CUAs for malicious purposes in general.

How attackers can use their own CUAs to conduct AI-powered cyber attacks

The most obvious use case for an attacker-controlled CUA is targeting internet-based app accounts. Most organizations now use hundreds of apps, with thousands of sprawling identities (including both accounts connected to enterprise SSO and local username-and-password logins), many of which are highly vulnerable to even low-sophistication attack techniques.

Previously, identity attacks against modern SaaS environments and the sprawl of apps and accounts required a lot of manual work to scale. Because web identities are implemented in mostly bespoke ways across thousands of sites (and they are constantly changing) attacks on them are challenging to automate. Further, the act of logging in using automated methods has been impacted by widespread bot protection — specifically to prevent malicious automation.

So attackers end up sending phishing links through email and targeting only a few high-value apps for credential stuffing, despite the availability of credentials online (which, as the Snowflake attacks demonstrate, can be an untapped treasure trove for attackers).

The Snowflake attacks saw credentials from infostealer infections dating back to 2020 used to breach ~165 customer tenants, resulting in hundreds of millions of breached customer records — arguably the biggest cyber breach of the year. But the impact could have been significantly worse than this if the attackers had access to a CUA.

We know that about 1 in 3 users reuse passwords, so there’s a good chance that many of those exact same credentials were also valid for other apps. It’s very tough to manually test each credential by logging into even a few dozen apps (or to build a web automation to do so). But it becomes significantly easier if you can ask a CUA to:

“Find a list of the top 1000 SaaS apps”.

“Try to login to the app using this username and password. Let me know which apps you successfully logged into”.

“Use takeout services to download data from each app and send it to this location, grouping by company name” (or even just ask the model to cut and paste or download the data from the account).

This is how you really scale these attacks.

CUAs also change how and where phishing can take place. Phishing that happens outside of email is much less likely to be intercepted by enterprise anti-phishing controls. You could:

1. Task an agent to create Reddit, Discord, and Slack accounts, login, and find the 100 (or 10000?) biggest subreddits/communities/channels. Now have it join those, and write posts that seem relevant to ongoing threads, or write targeted DMs and include links to a phishing page. If the account gets banned, no problem, automatically start over. Not enough karma? Instruct the agent to build karma.

2. Or consider a more targeted scenario: connect to a specific target (or group of targets) via LinkedIn, read all of your target’s posts and comments, use that context to start a conversation on a topic you know will interest them as a phishing lure, and direct them to your phishing site.

Operator caveats

Now, it’s worth pointing out that Operator has controls that are designed to prevent this sort of abuse. For example, Operator is trained to proactively ask the user to take over for tasks that require login, payment details, or when solving CAPTCHAs. The Operator System Card also cites proactive refusals of high-risk tasks, confirmation prompts before critical actions, and active monitoring systems to detect and mitigate potential threats.

It’s unclear at this point how resistant Operator will be to attack or abuse. But, as we said earlier, this isn’t really about Operator: once CUA technology becomes more widely available, there’s no doubt (if recent trends are anything to go by) that models will emerge with fewer (or no) safety controls.

Why CUA-based automation is a problem for security teams

Attackers have been using automation tools forever, and in response, developers have been building protections against them (e.g. Cloudflare Turnstile and CAPTCHAs). Using LLMs to supercharge these tools isn’t new either, nor is using automation apps for malicious purposes (see our SaaS attack matrix entry for shadow workflows). So what’s the difference?

Previously, attackers needed to tie together automated browsers, get bot protection bypasses working, write code to extract screenshots from these browsers, pump those screenshots into a traditional LLM, generate response actions, and write code to execute those actions using browser automation. This took a lot of manual work and constant maintenance, and it wasn’t very effective, because general-purpose LLMs weren’t good at interpreting what they were seeing.

So this isn’t so much a change in capability as a signal that there is going to be a massive increase in performance compared to other AI agents. Bundle the new model’s ability to understand webpages with the ability to interact with them, and you have something that might soon create real-world impact at scale.

Perhaps the only real obstacles are safety controls and cost. But as we’ve seen after previous GenAI launches (most recently with DeepSeek), competitors have been quick to follow with models that outperform the original. Some of these models will be open and contain far fewer safety protections. An open CUA model in the future might be the trigger that enables attackers to leverage these capabilities at scale.

So what?

The TL;DR is that the adoption of CUAs has the potential to significantly lower the cost to attackers of running identity attacks such as phishing and credential stuffing, while increasing their reach.

We can expect improved account takeover attacks in the future as this technology becomes more widespread, with phishing attacks being increasingly delivered outside of traditional (well-protected) mediums like email, and credential stuffing being weaponized on an even more widespread scale, across a broader range of apps. These capabilities will also become more accessible, with even less advanced attackers able to harness them.

Right now, Operator runs in a sandboxed browser environment. But going forward, delivering more value will require a greater ability to perform authenticated access as the user. So one could imagine new features being built to expose passwords to this sandbox, or these agents being enabled outside the sandbox to operate in your browser (primarily) or directly on your OS. We’ve already seen such agents implemented as browser extensions, which makes sense because extensions can see the tab and interact with the page, and some early extension-based agents have existed for a while.

If we have agents operating on user endpoints, not in sandboxes, that means they will have access to all identities that are already authenticated, or that can be automatically authenticated (password manager autofills etc.). There’s nothing fundamentally stopping you from prompt-injecting a victim's CUA and tricking it into creating a malicious integration, or sending you an API key.

So to summarize...

Organizations should anticipate an increase in identity attacks targeting web-based apps and services using techniques that can be amplified by CUAs such as phishing and credential stuffing. We recommend that organizations:

Anticipate an increase in phishing attacks delivered outside of email, and evaluate your detection capabilities for mediums such as IM platforms and social media sites.

Find and harden identities that are vulnerable to attack techniques that can be automated (e.g. mass credential stuffing), such as identities missing phishing-resistant MFA (or MFA altogether).

Ensure that all identities are suitably protected, including those outside the scope of traditional identity stores (such as Active Directory and modern equivalents like Entra and Okta) that are used to access the much broader set of web-based services.
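As a rough illustration of the “find and harden” step, here is a minimal sketch that triages an identity inventory by MFA strength. It assumes you have already collected, per user and per app, which MFA methods are registered; the `Identity` record, its fields, and the method labels are hypothetical. Treating FIDO2/WebAuthn, passkeys, and smart cards as phishing-resistant follows common guidance, but the exact set is an assumption you should adjust. An account counts as phishing-resistant here only if *every* registered method is, because a phishable fallback (such as SMS) lets an attacker downgrade the login.

```python
from dataclasses import dataclass, field

# Assumption: method labels considered phishing-resistant in this sketch.
PHISHING_RESISTANT = {"fido2", "webauthn", "passkey", "smartcard"}


@dataclass
class Identity:
    # Hypothetical inventory record for one account on one app.
    user: str
    app: str
    mfa_methods: set[str] = field(default_factory=set)


def classify(identity: Identity) -> str:
    """Label an identity by the weakest MFA method registered on it."""
    if not identity.mfa_methods:
        return "no_mfa"
    # Any phishable fallback method weakens the whole account.
    if identity.mfa_methods <= PHISHING_RESISTANT:
        return "phishing_resistant"
    return "phishable_mfa"


def find_weak_identities(inventory: list[Identity]) -> list[tuple[Identity, str]]:
    """Return identities lacking phishing-resistant MFA, no-MFA accounts first."""
    priority = {"no_mfa": 0, "phishable_mfa": 1}
    labelled = [(ident, classify(ident)) for ident in inventory]
    weak = [(ident, label) for ident, label in labelled if label in priority]
    return sorted(weak, key=lambda pair: (priority[pair[1]], pair[0].app, pair[0].user))


if __name__ == "__main__":
    inventory = [
        Identity(user="alice", app="crm", mfa_methods={"webauthn"}),
        Identity(user="bob", app="wiki"),
        Identity(user="carol", app="crm", mfa_methods={"sms", "webauthn"}),
    ]
    for ident, label in find_weak_identities(inventory):
        print(ident.user, ident.app, label)
```

Sorting accounts with no MFA ahead of those with only phishable MFA reflects the priority order in the recommendation above. In practice, the inventory would have to be assembled from your IdP, app admin consoles, or browser-level telemetry, including local logins that never touch SSO.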

How Push can help

AI-powered or not, identity attacks are what Push is designed to combat. Our features and controls designed to stop account takeover via phishing, credential stuffing, and session hijacking remain effective in this new world — in fact, as attackers are granted the ability to conduct these attacks with greater speed and scale, they become more valuable than ever.

If you're interested in learning more, check out our on-demand webinar where we demonstrate the use of CUAs for automating identity attacks, particularly in the context of SaaS account takeover.

If you’d like to learn more about Push, set up a demo with our team or sign up yourself to have a look at the platform.